WTH is Open Service Mesh?

If you’re working with Kubernetes, even if you’ve only just started, you have probably heard about Service Meshes. A Service Mesh adds a layer on top of your Kubernetes cluster (and other orchestrators) that provides additional features for handling traffic between your applications. Service Meshes were created to deal with the complexity of communication in microservices-based applications, where you have lots of small services, potentially with lots of replicas, trying to talk to each other. Instead of handling things like mutual TLS and access control through Kubernetes or the application code, you let the Service Mesh provide these services.

There are lots of Service Mesh implementations out there, ranging from big players like Istio to smaller niche players. You can see a useful list of meshes using this site. Today we are going to look at a relatively new player in this area that has come from Microsoft called Open Service Mesh, which may be particularly interesting for anyone using AKS.

What is Open Service Mesh?

Back in 2019, Microsoft, along with HashiCorp, Buoyant, Solo.io and others, announced a project called the Service Mesh Interface (SMI). This was not a service mesh itself. It was a specification for a standard interface to service meshes, intended to bring some consistency to the APIs used by Service Meshes and to promote interoperability. Over the last year, this has gained some traction, with several Service Meshes implementing some or all of the SMI spec. If you’re interested in reading the SMI spec, you can find it at SMI | A standard interface for service meshes on Kubernetes (smi-spec.io).

Open Service Mesh (OSM) is Microsoft’s implementation of the SMI in an actual Service Mesh. This is an Open Source project and a CNCF sandbox project. OSM is intended to be a simple, lightweight Service Mesh and so focusses on providing just the features of the SMI. In particular, OSM provides:

- Mutual TLS between pods

- Fine-grained access control policies for services

- Traffic shifting between deployments

- Observability and insights into application metrics

- Easy configuration via the Service Mesh Interface

Other Service Meshes like Istio or Consul provide more features than this, but they are also much more complex to deploy and manage.

It should be noted that currently OSM is under development and is not ready for production workloads. I don’t expect it will be too long before we see a GA release.

How does Open Service Mesh Work?

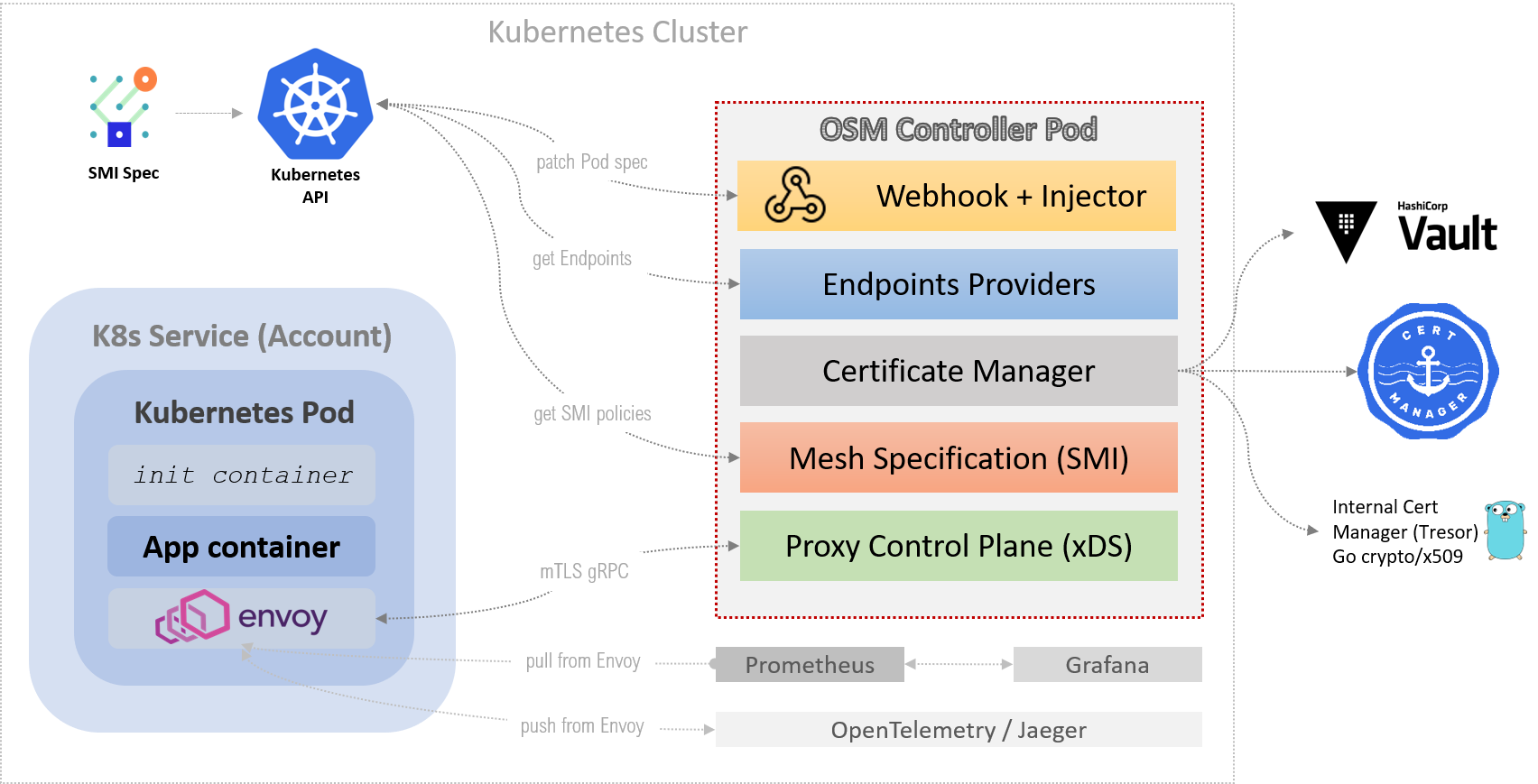

Open Service Mesh is deployed onto your Kubernetes cluster and has two key components. The first is the OSM controller pod, which contains the brains of OSM. The second is a sidecar container running the popular Envoy proxy, which is injected into each pod in the mesh. Envoy is used by many Service Mesh implementations. In addition, the controller pod can talk to an external secret provider to supply certificates if you want it to, although this is not required.

Once up and running, any requests to or from your pods are directed through the Envoy proxy. By doing this, OSM can apply any access controls or traffic routing rules to the request, as well as encrypting and protecting the traffic using mutual TLS.

For observability, OSM also provides Prometheus metrics and Grafana dashboards. You can find more details on these here.

Installing OSM

Currently, to install OSM, you need to use a command-line tool downloaded from GitHub. Full instructions for this can be found here. However, the process is as simple as downloading the tool, making sure kubectl is connected to the right Kubernetes cluster, and then running osm install.

I would be surprised if we did not see a more automated way to install this into AKS in the future.

Once OSM is installed, you need to enable it, which is done on a per-namespace basis. For each namespace you want OSM to manage, you need to either run this command:

```bash
osm namespace add <namespace>
```

or add a label to your namespace:

```
openservicemesh.io/monitored-by=<mesh-name>
```

Finally, you need to restart any pods that are already running in the namespace so that the Envoy sidecar is injected into them.
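If you manage namespaces declaratively, you can set the label in the Namespace manifest itself. The following is a minimal sketch. It assumes the namespace is called bookstore (borrowed from the access-control example later on) and that the mesh was installed under the name osm. Substitute whatever mesh name you used at install time.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: bookstore                        # assumption: example namespace name
  labels:
    # Marks this namespace as monitored by the mesh named "osm" (assumed mesh name)
    openservicemesh.io/monitored-by: osm
```

Pods that were already running in the namespace still need restarting after you apply this. Depending on your OSM release, osm namespace add may also set other metadata on the namespace. If sidecars do not appear after a restart, check the OSM documentation for your version.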

Setting Up OSM

In a default deployment of OSM, mTLS happens automatically with the inbuilt certificate provider. You can use an external provider if you wish.

Rules for authorisation and traffic policies can then be created as native Kubernetes objects. For example, using the object below, we can create a rule that splits traffic in a 50/50 ratio between two different versions of our application.

```yaml
apiVersion: split.smi-spec.io/v1alpha2
kind: TrafficSplit
metadata:
  name: my-trafficsplit
spec:
  # The root service that clients use to connect to the destination application.
  service: my-website
  # Services inside the namespace with their own selectors, endpoints and configuration.
  backends:
  - service: my-website-v1
    weight: 50
  - service: my-website-v2
    weight: 50
```
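The TrafficSplit above refers to Services only by name, so those Services need to exist in the same namespace. Below is a sketch of what they might look like. The pod labels app and version and port 80 are hypothetical choices for illustration. OSM does not prescribe them.

```yaml
# Root service that clients call; its selector matches pods of both versions.
apiVersion: v1
kind: Service
metadata:
  name: my-website
spec:
  selector:
    app: my-website        # hypothetical label shared by v1 and v2 pods
  ports:
  - port: 80               # hypothetical port
---
# Backend service that selects only the v1 pods.
apiVersion: v1
kind: Service
metadata:
  name: my-website-v1
spec:
  selector:
    app: my-website
    version: v1            # hypothetical version label
  ports:
  - port: 80
---
# Backend service that selects only the v2 pods.
apiVersion: v1
kind: Service
metadata:
  name: my-website-v2
spec:
  selector:
    app: my-website
    version: v2
  ports:
  - port: 80
```

With this layout, you shift more traffic to v2 by editing the weight values in the TrafficSplit. The Services and the Deployments behind them stay unchanged.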

That is all that is required; OSM will now handle splitting the traffic and sending it to the right pods. Similar rules can be created to allow only certain service accounts to access specific resources. In the example below, we allow two service accounts to call two specific HTTP routes (buy-a-book and books-bought) exposed by our bookstore application.

```yaml
kind: TrafficTarget
apiVersion: access.smi-spec.io/v1alpha2
metadata:
  name: bookstore-v1
  namespace: bookstore
spec:
  destination:
    kind: ServiceAccount
    name: bookstore-v1
    namespace: bookstore
  rules:
  - kind: HTTPRouteGroup
    name: bookstore-service-routes
    matches:
    - buy-a-book
    - books-bought
  sources:
  - kind: ServiceAccount
    name: bookbuyer
    namespace: bookbuyer
  - kind: ServiceAccount
    name: bookthief
    namespace: bookthief
```
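The TrafficTarget above refers to an HTTPRouteGroup and three ServiceAccounts that are not shown. Below is a sketch of those supporting objects. The paths and methods are hypothetical. The specs.smi-spec.io API version is also an assumption, since it depends on which SMI versions your OSM release supports.

```yaml
# Route group referenced by the TrafficTarget's rules.
# Assumption: check which specs.smi-spec.io version your OSM release supports.
apiVersion: specs.smi-spec.io/v1alpha4
kind: HTTPRouteGroup
metadata:
  name: bookstore-service-routes
  namespace: bookstore
spec:
  matches:
  - name: buy-a-book            # name listed under "matches" in the TrafficTarget
    pathRegex: /buy-a-book      # hypothetical path
    methods:
    - GET
  - name: books-bought
    pathRegex: /books-bought    # hypothetical path
    methods:
    - GET
---
# Identity of the destination workload.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookstore-v1
  namespace: bookstore
---
# Identities of the permitted callers.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookbuyer
  namespace: bookbuyer
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: bookthief
  namespace: bookthief
```

Each workload's pod spec must also set serviceAccountName to the matching account. The TrafficTarget identifies callers by service account rather than by pod labels.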

We’re not going to go into great detail on how to use OSM here. I would recommend that anyone wanting to learn more works through the tutorial in the OSM GitHub repository: osm/docs/example at main · openservicemesh/osm (github.com).

Why Would I Want to Use Open Service Mesh?

The first question to answer here is whether a Service Mesh, in general, is useful to you. It may seem that Kubernetes and Service Meshes go hand in hand, but adding the complexity of a Service Mesh is only really useful if it provides you with some benefits. Generally, people who benefit from a service mesh will be looking for one or more of the following:

- Implementation of Mutual TLS between all pods without needing manual configuration

- Fine-grained access control for traffic between pods and services

- Traffic control for routing requests to different versions of applications, blue/green testing etc.

- Better observability of traffic between services

These are the key services provided by a Service Mesh. Some more complex Service Meshes offer additional capabilities, such as service discovery, configuration stores and so on.

If you think you do need a Service Mesh, the next question is whether OSM is the right one for you. As mentioned, there are many Service Mesh implementations, and they all have benefits and disadvantages. OSM’s key benefits boil down to:

- A Service Mesh that is simple to deploy and manage

- Implements the SMI specification

- No bloat of features (at least currently), the SMI spec is the key focus

- Supported by Microsoft

- Donated to the CNCF

The last point on that list, donation to the CNCF, may be a significant benefit if you want to ensure that your chosen Service Mesh remains Open Source and governed by the CNCF community. There has been a lot of concern of late about Google’s decision not to donate Istio to the CNCF and instead to create the Open Usage Commons.

Finally, given this is a project developed by Microsoft, I would expect to see more integration with Azure and AKS, which may be beneficial if you are bought into the Azure ecosystem.

What Issues does Open Service Mesh Have?

The biggest issue with OSM currently is its pre-release status. Most of the features are present, so now is an excellent time to get it running and familiarise yourself with how it works. However, if you need to go to production, this is a blocker right now. Hopefully, we will see a GA release soon.

Another downside for some may be the limited feature set. Microsoft has been very clear that the initial release of OSM is focussed purely on the SMI spec and implementing just those features. If you are looking for things like service discovery or configuration storage, these are not present. This is part of the trade-off between simplicity and features. If you need these additional features, you may need to look at some of the more complex offerings like Istio or Consul.

OSM uses Envoy as the proxy between pods, but it does not use this for Ingress. Instead, users of OSM are limited to a choice of three ingress controllers:

- Nginx Ingress Controller

- Azure Application Gateway Ingress Controller

- Gloo API Gateway

If you need to use a different Ingress controller, it may work, but it is not officially supported.

Finally, if your application requires low-latency network traffic, bear in mind that introducing a Service Mesh could increase latency. This is true for all Service Meshes, not just OSM, because each request now passes through additional proxy hops. There is some debate as to how much latency the Envoy proxy in particular adds. You should test this yourself to determine whether it is an issue for your application. Envoy is widely used in this role, so for most applications it is unlikely to have a significant impact.