WTH are Azure Container Apps?

This week we saw Microsoft’s annual (or twice-annual) Ignite conference, which brought lots of announcements about new and updated Azure technologies. You can see full details on all the announcements here. One of the stand-out announcements was a new service called Azure Container Apps. This service is described as “A serverless container service for running modern apps at scale”, so what does that mean? Let’s look beyond the marketing and figure out WTH Azure Container Apps are and why you might want to use them.

What are Azure Container Apps?

Azure Container Apps (or ACA for short) is a PaaS platform for running container-based applications. Essentially, it is a serverless/PaaS implementation of Kubernetes, similar to Google’s GKE Autopilot service. We already had Azure Container Instances, which allowed you to run single containers (or small groups of containers) as a top-level service without managing the underlying orchestrator, nodes, etc. ACA takes this further and provides a serverless platform for running whole multi-container applications. What you get with ACA is:

- The ability to run any workload that can run in a container (and meets the service limitations, more on that later)

- The ability to run multiple containers inside the same environment

- Built-in Ingress service with HTTPS using Envoy

- Autoscaling using the built-in KEDA service

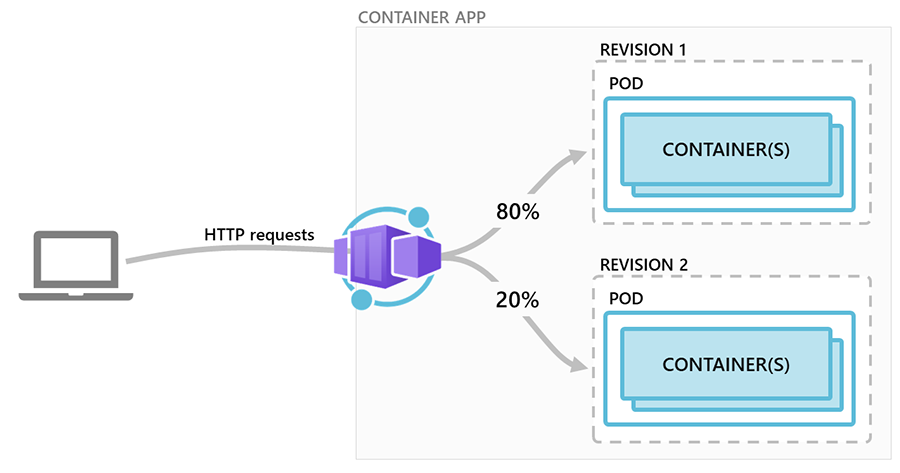

- Integrated traffic splitting for Blue/Green style deployments

- Built-in DAPR

- Secure Secret Storage

- Integration with Log Analytics

- Fully Managed Kubernetes platform with all updates and maintenance handled for you

ACA is designed to be a fully ready serverless orchestrator that you can drop your application into and run using the available services.

How do Azure Container Apps Work?

Core Concepts

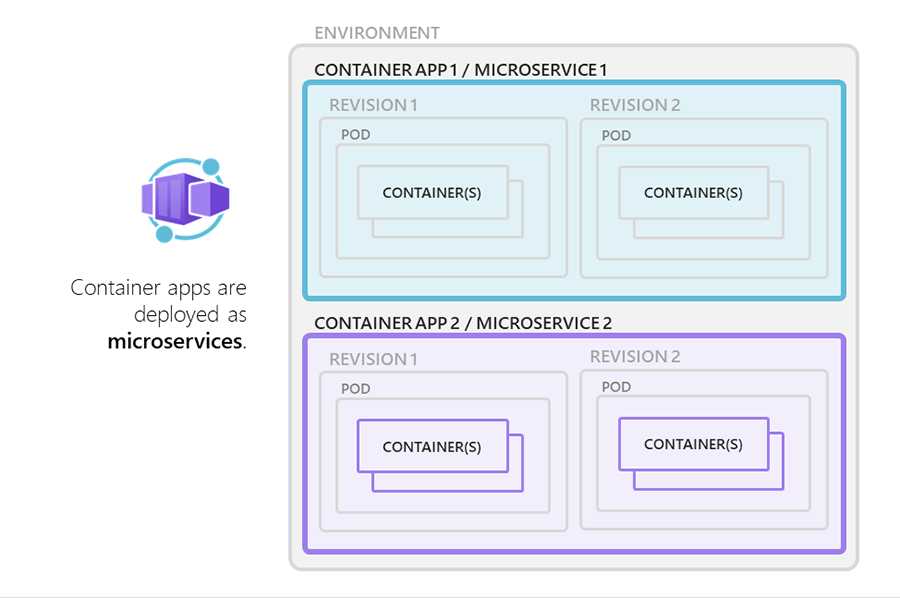

ACA has a few different concepts that you need to understand to be able to work with it. First, we have an environment. The environment is an instance of an ACA service and is essentially the same as a Kubernetes cluster, or maybe a namespace. You can deploy multiple container applications into an environment, and they will all be able to communicate with each other. If you were building a microservice-based application, you would deploy all your microservices into the same environment. If you need separation between applications, you would deploy them to different environments.

Next, we have Containers or Container Apps. These are what you deploy into your environment, and you can think of them as similar to Kubernetes Pods. Each container app can contain multiple containers, which behave like containers in a single pod: they share the same life cycle and the same disk, and can communicate directly with each other. Each service or microservice in your application that has an independent life cycle would be a separate container app in the same environment.

Finally, we have revisions. Revisions are immutable snapshots of your container application at a point in time. When you upgrade your container app to a new version, you create a new revision. This allows you to have the old and new versions running simultaneously and use the traffic management functionality to direct traffic to old or new versions of the application.

Revisions can be retired once they are no longer needed to stop them using resources in your environment.
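
To make the traffic management idea concrete, here is a minimal Python sketch of percentage-based routing between two revisions. It is purely illustrative and is not how ACA or Envoy implement splitting internally; the function name `pick_revision` and the revision names are hypothetical.

```python
import random


def pick_revision(weights, rng=random):
    """Pick a revision name according to percentage weights.

    `weights` maps a revision name to its share of traffic (0-100).
    The shares are assumed to add up to exactly 100.
    """
    if sum(weights.values()) != 100:
        raise ValueError("traffic weights must add up to 100")
    names = list(weights)
    # random.choices performs a weighted random selection.
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical split: 90% of requests stay on the old revision,
# 10% go to the new one while it is being tested.
split = {"myapp--v1": 90, "myapp--v2": 10}
```

Rolling back in this model is just a matter of setting the old revision's weight back to 100, which is the appeal of keeping both revisions running side by side.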

Additional Services

Alongside your applications, there are several built-in services that you can utilise.

First, there is a built-in Ingress controller running Envoy. You can use this to expose your application to the outside world and automatically get a URL and an SSL certificate.

KEDA (Kubernetes Event-driven Autoscaling) is also running in the cluster. KEDA allows you to automatically scale your app up and down (all the way to 0) based on concurrent HTTP requests, CPU or memory usage, or any of KEDA’s event-driven scalers (Service Bus, SQL DB, Redis and more).
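
As a rough mental model for scaling on concurrent HTTP requests, the Python sketch below uses a simple proportional rule: divide the current load by a per-replica target and clamp the result between a minimum and maximum. This is an assumption made for illustration, not the exact algorithm KEDA or ACA use (real autoscalers also apply polling intervals and cool-down periods), and the function name and default limits are hypothetical.

```python
import math


def desired_replicas(concurrent_requests, target_per_replica,
                     min_replicas=0, max_replicas=10):
    """Estimate how many replicas are needed for the current load.

    Simplified proportional rule (an assumption, not KEDA's exact logic):
    ceil(load / target), clamped to [min_replicas, max_replicas].
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(concurrent_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))
```

With a target of 50 concurrent requests per replica, 120 concurrent requests would ask for 3 replicas, and no traffic at all would scale the app to 0 unless `min_replicas` is raised to keep some capacity warm.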

ACA environments are also configured to run DAPR (Distributed Application Runtime) out of the box, so you can have your application take advantage of this for state management, pub/sub, secret management, bindings and service discovery/invocation. This does require your application to be developed to work with DAPR.

ACA also includes built-in internal DNS service discovery. You can expose your container apps with “internal ingress” to allow apps in the same environment to communicate with each other.

Application Deployment

ACA resources are top-level Azure resources, so deployment is done through the standard Azure methods - ARM Template/Bicep, PowerShell, CLI as well as third-party tools such as Pulumi and Terraform (Terraform integration was not released at the time of writing this).

Cost

Container apps are billed in a similar way to Azure Functions, that is, on a consumption basis. There is no base fee, and you only pay for what you use. There are three meters you are charged on. Prices are based on East US 2 and correct at the time of writing:

- Number of HTTP requests - the first 2 million are free and then $0.40 per million after that

- vCPU seconds - the amount of CPU consumed. The free grant includes 180,000 vCPU seconds (50 hours), after which usage is charged at $0.000024 per second. If you don’t want to scale down to 0 when not in use, you can also keep a minimum capacity running at all times, and this is charged at a lower rate of $0.000003 per second when idle.

- Memory GiB seconds - the amount of memory used per second. The free grant is 360,000 GiB seconds, then $0.000003 per GiB second after that (active or idle)
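
To see how the three meters combine, here is a small Python sketch that estimates a bill from the rates quoted above. It assumes the free grants are applied once per billing period and it ignores the lower idle vCPU rate; the function name and the usage figures in the example are made up for illustration.

```python
# Rates quoted in this article (East US 2, at the time of writing).
FREE_REQUESTS = 2_000_000
FREE_VCPU_SECONDS = 180_000
FREE_GIB_SECONDS = 360_000
PRICE_PER_MILLION_REQUESTS = 0.40
PRICE_PER_VCPU_SECOND = 0.000024   # active rate only
PRICE_PER_GIB_SECOND = 0.000003


def estimate_cost(requests, vcpu_seconds, gib_seconds):
    """Estimate the cost in USD for one billing period.

    Assumption: each free grant is subtracted once, and all vCPU
    time is billed at the active rate (idle pricing is ignored).
    """
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_vcpu = max(0, vcpu_seconds - FREE_VCPU_SECONDS)
    billable_gib = max(0, gib_seconds - FREE_GIB_SECONDS)
    return (
        billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
        + billable_vcpu * PRICE_PER_VCPU_SECOND
        + billable_gib * PRICE_PER_GIB_SECOND
    )


# Hypothetical usage: a 0.5 vCPU / 1 Gi app active for 200 hours,
# serving 5 million requests.
cost = estimate_cost(
    requests=5_000_000,
    vcpu_seconds=0.5 * 200 * 3600,
    gib_seconds=1.0 * 200 * 3600,
)
print(f"{cost:.2f}")  # 6.60
```

In this example, requests contribute $1.20, vCPU $4.32 and memory $1.08 after the free grants are subtracted.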

Why Would I Want To Use Azure Container Apps?

The biggest draws for Container Apps are the serverless container orchestration and the usage-based pricing. By using this platform, you can do away with needing to manage Kubernetes clusters, which, even with AKS, takes time and skill to do. You can let Microsoft manage the platform, handle patching and updates, platform security etc. and focus on building and deploying your application. With usage-based pricing, you can run your app without a minimum amount of hardware running at all times if you can scale to 0, or at a very low cost if you need some idle instances.

Alongside this, you also get integration of key services, which Microsoft also manages. Built-in HTTPS ingress and KEDA autoscaling take away two key headaches of running your application on Kubernetes and save you from needing to become an expert in Nginx, cert-manager and KEDA. If your application uses DAPR, you also get that built into the platform, so you can focus on implementing it in your application.

With revisions, you also get a nice way to handle updating applications and doing blue/green type deployments without the need to manage this yourself using something like Open Service Mesh or Istio. You can improve your deployment practice with quick testing and easy rollback without needing to implement this yourself.

What Issues Does Azure Container Apps Have?

To gain the benefits of the serverless platform and have Microsoft manage this for you, you give up flexibility or at least a certain degree of it. If you’re deploying your app to ACA, you are buying into the whole service MS provides and how they have built it. If your application works with that, you are good, but if you rely on some services that don’t exist or want to do something different from how the service has been designed, you are out of luck.

With Kubernetes, you could deploy whatever you wanted. With ACA, you are signing up for the services ACA provides, such as using Envoy for ingress, KEDA for scaling, Log Analytics for monitoring etc. The service is also heavily focused around DAPR. You don’t have to use it, but you will want to if you want some of the advanced features, for example, integration with Key Vault for secrets or an external state provider. It is not possible to integrate other application runtimes or service meshes.

The use of Azure Resources for deployment could also be a limiting factor for some. Your app needs to be deployed using ARM Templates/Bicep, PowerShell, CLI etc. You can’t just take your existing Kubernetes YAML or Helm charts and deploy them. This may seem like a step backwards for some and undoubtedly removes the cloud-agnostic approach that Kubernetes enabled.

ACA also doesn’t support all Kubernetes resources. You are just looking at Pods, Ingress and Secrets. Under the hood, I am certain Services and Deployments are being created, but you don’t see that. Perhaps more importantly, you cannot create ConfigMaps.

There are also some size limits on the apps you can deploy. Each container app must use one of the following hardware configurations:

| vCPUs | Memory (Gi) |
|---|---|
| 0.5 | 1.0 |
| 1.0 | 2.0 |
| 1.5 | 3.0 |
| 2.0 | 4.0 |

If you need more resources than this or a different CPU to memory ratio, then ACA won’t work for you.
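
If you want to check quickly whether a workload fits, the Python sketch below encodes the table above and returns the smallest configuration that satisfies a given CPU and memory requirement. The helper names are hypothetical; only the four size combinations come from the table.

```python
# Allowed (vCPUs, memory in Gi) combinations from the table above.
ALLOWED_SIZES = [(0.5, 1.0), (1.0, 2.0), (1.5, 3.0), (2.0, 4.0)]


def is_supported_size(vcpus, memory_gi):
    """Return True if the exact CPU/memory pair is an allowed size."""
    return (float(vcpus), float(memory_gi)) in ALLOWED_SIZES


def smallest_fitting_size(min_vcpus, min_memory_gi):
    """Return the smallest allowed size meeting both minimums, or None."""
    for vcpus, memory_gi in sorted(ALLOWED_SIZES):
        if vcpus >= min_vcpus and memory_gi >= min_memory_gi:
            return vcpus, memory_gi
    return None
```

For example, an app that needs 1 vCPU but 3.5 Gi of memory ends up on the 2.0 vCPU / 4.0 Gi size because of the fixed ratio, and anything needing more than 2 vCPUs or 4 Gi gets `None`.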

ACA currently only supports Linux container images, so no Windows support. ACA also does not support running any privileged containers and will generate an error if you try to use root permissions.

Finally, the obvious point: the service is currently in preview, so it is not production-ready yet.