Azure Spring Clean: Replacing Kubernetes Pod Security Policies with Azure Policy on AKS

This post is part of the Azure Spring Clean series being run by Joe Carlyle and Thomas Thornton, which is an event focussing on well managed Azure Tenants. Check out all the other articles at https://www.azurespringclean.com/.

If you want to lock down what sort of workloads can run in your Kubernetes clusters, then you have probably looked at using Pod Security Policies, or PSPs. PSPs allow you to force your workloads to run in a certain way and prevent them from running if they do not. For example, you can enforce that pods must run as a non-root user, allow only specific volume mounts or deny access to the host network. PSPs have been around for a while now and have been in preview in AKS for a couple of years; however, they are not going to make it out of preview.

PSP as a concept is being deprecated by Kubernetes for various reasons, and policy engines such as Open Policy Agent and Gatekeeper provide a much more flexible approach to Kubernetes security than the relatively rigid, pod-only approach of PSPs. In Azure and AKS, PSPs will not leave preview and will be deprecated as of 20th 2021 (although this date keeps moving). PSPs in AKS are being replaced by Azure Policy for AKS.

Azure Policy for AKS is an extension of the Azure Policy tooling to allow you to apply policies to workloads running inside your AKS cluster. Under the hood, this uses a managed version of Gatekeeper with policies defined using Open Policy Agent. In the rest of this article, we will look at how you can migrate from PSP to Azure Policy.

Defining Policies

Mapping PSPs to Azure Policies

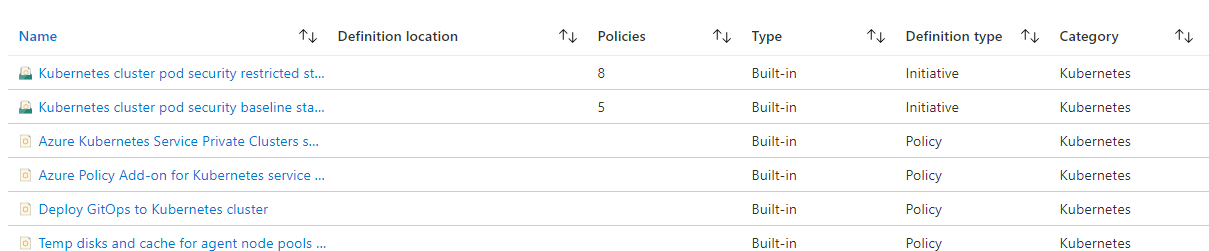

Before we can apply Azure Policy to our AKS cluster, we need to define the policies we want to use. We’re going to focus here on the capabilities that replicate PSPs. There are several more AKS policies that provide features outside of PSPs, such as restricting which container registries your containers can use. If you want to see all the policies available for AKS, go to the Policy section in the Azure portal, open Definitions, then filter by the “Kubernetes” category. Note that at the time of writing, only built-in policies are supported; you cannot create custom policies.
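If you would rather script this, a similar filter can be applied to the JSON that az policy definition list prints. The sketch below is a hypothetical helper (kubernetes_builtin_names is not part of any SDK); it assumes you have parsed that output into a list of dicts and that each item carries displayName, policyType and a metadata object with a category key, which is how built-in definitions are returned at the time of writing.

```python
from typing import Any, Dict, List


def kubernetes_builtin_names(definitions: List[Dict[str, Any]]) -> List[str]:
    """Return the sorted display names of built-in Kubernetes policy definitions.

    Assumes `definitions` is the parsed JSON array printed by
    `az policy definition list`; each item is expected to have
    `displayName`, `policyType` and a `metadata` object with `category`.
    """
    names = []
    for definition in definitions:
        # metadata can be missing or null on some definitions
        category = (definition.get("metadata") or {}).get("category")
        if category == "Kubernetes" and definition.get("policyType") == "BuiltIn":
            names.append(definition.get("displayName", ""))
    return sorted(names)
```

For example, save the output of az policy definition list --output json to a file, load it with json.load, and pass the resulting list to the function.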

To migrate existing PSPs to Azure Policy, you will need to map each PSP setting to the appropriate Azure Policy. The table below provides this mapping to help you translate between them. Note that it is not always a one-to-one mapping: several Azure Policies group together multiple PSP settings, such as host network and host ports, or the run-as user and group settings.

| Area | PSP Name | Azure Policy Name |
|---|---|---|
| Running of privileged containers | privileged | Kubernetes cluster should not allow privileged containers |
| Restricting escalation to root privileges | allowPrivilegeEscalation | Kubernetes clusters should not allow container privilege escalation |
| Usage of host networking and ports | hostNetwork, hostPorts | Kubernetes cluster pods should only use approved host network and port range |
| Usage of host namespaces | hostPID, hostIPC | Kubernetes cluster containers should not share host process ID or host IPC namespace |
| Usage of the host filesystem | allowedHostPaths | Kubernetes cluster pod hostPath volumes should only use allowed host paths |
| The user and group IDs of the container | runAsUser, runAsGroup, supplementalGroups, fsGroup | Kubernetes cluster pods and containers should only run with approved user and group IDs |
| Usage of volume types | volumes | Kubernetes cluster pods should only use allowed volume types |
| Allow specific FlexVolume drivers | allowedFlexVolumes | Kubernetes cluster pod FlexVolume volumes should only use allowed drivers |
| Requiring the use of a read-only root file system | readOnlyRootFilesystem | Kubernetes cluster containers should run with a read only root file system |
| Linux capabilities | defaultAddCapabilities, requiredDropCapabilities, allowedCapabilities | Kubernetes cluster containers should only use allowed capabilities |
| The allowed proc mount types for the container | allowedProcMountTypes | Kubernetes cluster containers should only use allowed ProcMountType |
| The seccomp profile used by containers | annotations | Kubernetes cluster containers should only use allowed seccomp profiles |
| The SELinux context of the container | seLinux | Kubernetes cluster pods and containers should only use allowed SELinux options |
| The sysctls used by containers | forbiddenSysctls | Kubernetes cluster containers should not use forbidden sysctl interfaces |
| The AppArmor profile used by containers | annotations | Kubernetes cluster containers should only use allowed AppArmor profiles |
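If you have a lot of PSPs to migrate, the table can also be expressed as a lookup. The sketch below is a hypothetical helper, not an official migration tool: it assumes you have exported a PSP as JSON (for example with kubectl get psp <name> -o json) and parsed it into a dict, and it returns the Azure Policies from the table that cover the spec fields and annotations that PSP uses. The two annotation keys are the ones PSPs used for seccomp and AppArmor.

```python
from typing import Any, Dict, List

# Azure Policy display names, copied from the mapping table above.
PRIVILEGED = "Kubernetes cluster should not allow privileged containers"
ESCALATION = "Kubernetes clusters should not allow container privilege escalation"
HOST_NETWORK = "Kubernetes cluster pods should only use approved host network and port range"
HOST_NAMESPACES = "Kubernetes cluster containers should not share host process ID or host IPC namespace"
HOST_PATHS = "Kubernetes cluster pod hostPath volumes should only use allowed host paths"
USER_GROUP = "Kubernetes cluster pods and containers should only run with approved user and group IDs"
VOLUME_TYPES = "Kubernetes cluster pods should only use allowed volume types"
FLEX_VOLUMES = "Kubernetes cluster pod FlexVolume volumes should only use allowed drivers"
READ_ONLY_ROOT = "Kubernetes cluster containers should run with a read only root file system"
CAPABILITIES = "Kubernetes cluster containers should only use allowed capabilities"
PROC_MOUNT = "Kubernetes cluster containers should only use allowed ProcMountType"
SECCOMP = "Kubernetes cluster containers should only use allowed seccomp profiles"
SELINUX = "Kubernetes cluster pods and containers should only use allowed SELinux options"
SYSCTLS = "Kubernetes cluster containers should not use forbidden sysctl interfaces"
APPARMOR = "Kubernetes cluster containers should only use allowed AppArmor profiles"

# PSP spec field -> Azure Policy that covers it (several fields share a policy).
SPEC_FIELD_TO_POLICY = {
    "privileged": PRIVILEGED,
    "allowPrivilegeEscalation": ESCALATION,
    "hostNetwork": HOST_NETWORK,
    "hostPorts": HOST_NETWORK,
    "hostPID": HOST_NAMESPACES,
    "hostIPC": HOST_NAMESPACES,
    "allowedHostPaths": HOST_PATHS,
    "runAsUser": USER_GROUP,
    "runAsGroup": USER_GROUP,
    "supplementalGroups": USER_GROUP,
    "fsGroup": USER_GROUP,
    "volumes": VOLUME_TYPES,
    "allowedFlexVolumes": FLEX_VOLUMES,
    "readOnlyRootFilesystem": READ_ONLY_ROOT,
    "defaultAddCapabilities": CAPABILITIES,
    "requiredDropCapabilities": CAPABILITIES,
    "allowedCapabilities": CAPABILITIES,
    "allowedProcMountTypes": PROC_MOUNT,
    "seLinux": SELINUX,
    "forbiddenSysctls": SYSCTLS,
}

# Seccomp and AppArmor were configured through PSP annotations, not spec fields.
ANNOTATION_TO_POLICY = {
    "seccomp.security.alpha.kubernetes.io/allowedProfileNames": SECCOMP,
    "apparmor.security.beta.kubernetes.io/allowedProfileNames": APPARMOR,
}


def policies_for_psp(psp: Dict[str, Any]) -> List[str]:
    """Return the sorted Azure Policy names needed to cover one PSP manifest."""
    spec = psp.get("spec") or {}
    annotations = (psp.get("metadata") or {}).get("annotations") or {}
    needed = {SPEC_FIELD_TO_POLICY[f] for f in spec if f in SPEC_FIELD_TO_POLICY}
    needed |= {ANNOTATION_TO_POLICY[a] for a in annotations if a in ANNOTATION_TO_POLICY}
    return sorted(needed)
```

This only tells you which policies belong in an initiative; the PSP values themselves (ID ranges, allowed lists and so on) still have to be translated into each policy's parameters by hand.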

Creating Initiatives

It’s unlikely that you will want to assign these policies one at a time; more likely, you will want to create different “initiatives” for different security scenarios. Initiatives allow you to group policies together and provide default parameters for these policies to build a pre-defined configuration, much like a PSP for a specific workload. You are likely going to need multiple different initiatives for different types of workloads. For example, I have found that I need a less restrictive set of policies for running Nginx ingress controllers. In contrast, I want to be more restrictive for my application workloads, so I have two different initiatives defining my security posture for those workloads.

Azure Policy already includes two built-in initiatives for PSPs:

| Name | Description | Policies | Version |
|---|---|---|---|
| Kubernetes cluster pod security baseline standards for Linux-based workloads | This initiative includes the policies for the Kubernetes cluster pod security baseline standards. This policy is generally available for Kubernetes Service (AKS), and preview for AKS Engine and Azure Arc enabled Kubernetes. For instructions on using this policy, visit https://aka.ms/kubepolicydoc. | 5 | 1.1.1 |
| Kubernetes cluster pod security restricted standards for Linux-based workloads | This initiative includes the policies for the Kubernetes cluster pod security restricted standards. This policy is generally available for Kubernetes Service (AKS), and preview for AKS Engine and Azure Arc enabled Kubernetes. For instructions on using this policy, visit https://aka.ms/kubepolicydoc. | 8 | 2.1.1 |

You can look at these initiatives and how they are defined to determine whether they fit your workloads. If they do, you’re lucky, and you can just take those initiatives and use them. More likely, however, you will need to define some of your own custom initiatives. If you want to do this, I would suggest using one of the built-in initiatives as your starting point. If you locate them in the Azure Portal, you can click the “Duplicate initiative” button. This will create a copy of the initiative, which you can then customise however you like.

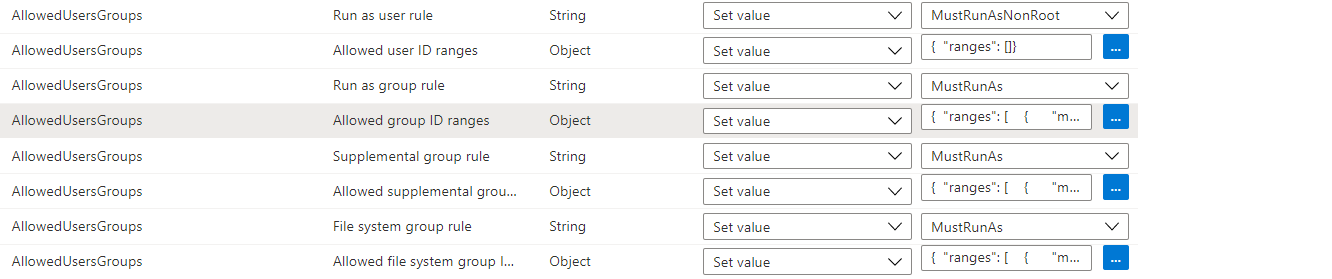

When you’re creating an initiative, there are two key areas to look at:

- Policies - which policies do you actually want to use in your initiative

- Policy Parameters - what values do you want to pass into the individual policies to define how they work? For example, which group ID ranges do you want to allow? It is possible to let the person assigning the initiative specify all these values through initiative parameters; however, this makes your initiatives very complex and makes it difficult to police what a cluster’s security posture is. You are better off defining each initiative as a specific security posture and having the person assigning it pick the appropriate initiative.
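To make the “one initiative, one posture” approach concrete, here is a sketch of the body of a custom initiative (a policy set definition) that fixes every policy parameter except the effect and the two namespace lists. It is a hypothetical helper: the policy definition IDs you pass in are placeholders for the real built-in IDs, it assumes every referenced policy accepts effect, excludedNamespaces and namespaces parameters (check each definition before relying on this), and the default exclusion list should be copied from the built-in definitions rather than invented.

```python
from typing import Any, Dict, List, Sequence, Tuple

# Initiative-level parameters left open to whoever assigns the initiative;
# everything else is fixed so that the initiative represents one posture.
EXPOSED_PARAMETERS = ("effect", "excludedNamespaces", "namespaces")


def build_initiative(display_name: str,
                     policies: List[Tuple[str, str, Dict[str, Any]]],
                     default_excluded: Sequence[str]) -> Dict[str, Any]:
    """Build a hypothetical policy set definition body.

    `policies` holds (reference_id, policy_definition_id, fixed_parameters).
    Fixed values are embedded as literals; the exposed parameters are passed
    through with "[parameters('...')]" expressions and override any fixed
    value with the same name.
    """
    definitions = []
    for reference_id, definition_id, fixed in policies:
        params = {name: {"value": value} for name, value in fixed.items()}
        for name in EXPOSED_PARAMETERS:
            params[name] = {"value": f"[parameters('{name}')]"}
        definitions.append({
            "policyDefinitionReferenceId": reference_id,
            "policyDefinitionId": definition_id,
            "parameters": params,
        })
    return {
        "properties": {
            "displayName": display_name,
            "policyType": "Custom",
            "parameters": {
                "effect": {"type": "String", "defaultValue": "Audit",
                           "allowedValues": ["Audit", "Deny", "Disabled"]},
                # Copy the default exclusions from the built-in definitions.
                "excludedNamespaces": {"type": "Array",
                                       "defaultValue": list(default_excluded)},
                "namespaces": {"type": "Array", "defaultValue": []},
            },
            "policyDefinitions": definitions,
        }
    }
```

Defaulting the effect to Audit fits the workflow described later: assign in audit mode first, then switch to Deny once compliance looks right.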

Once you go through this process, you should have a set of initiatives you can then apply. To give you some examples, I have the following initiatives in one of my environments:

- Nginx Ingress PSP Initiative - Nginx Ingress needs some slightly elevated rights that I don’t want to give other applications, so I have a separate initiative that only gets applied to Nginx namespaces (see next section on how to do this)

- Prometheus PSP Initiative - Prometheus needs access to several host resources, which I want to deny for other applications, and so I have a specific initiative for this workload

- Standard Workload Restricted PSP - for the rest of my workloads, which are relatively simple and don’t need any elevated rights, I have a restrictive initiative that locks down most things

Assigning Initiatives

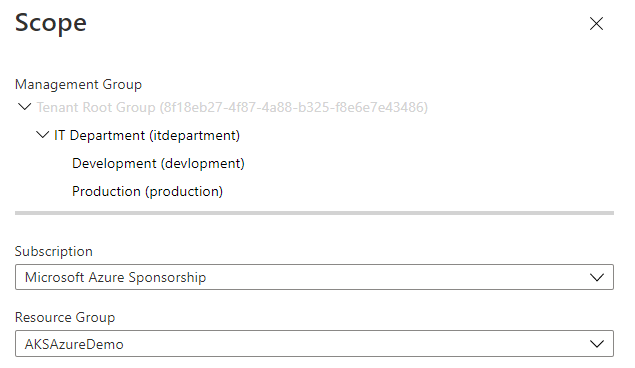

Now that we have created the required initiatives, we need to assign them so they are used. There are two things we need to look at here.

First, we need to assign the initiatives so that they are actually applied to our AKS clusters. To do this, we click the “Assign” button on the initiative in the portal and then define the scope where this initiative is applied. This can be done at the management group, subscription or resource group level, and any AKS clusters under that scope will have this initiative assigned. We can also add exclusions so the initiative is not applied to specific resources within that scope.
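Scope works by containment: an assignment applies to everything underneath the scope’s resource ID, minus any exclusions. The simplified model below (assignment_applies is a hypothetical name) shows this for subscription and resource group scopes, where containment is a case-insensitive prefix match on the resource ID. Management group scopes depend on the management group hierarchy and are not modelled.

```python
from typing import Iterable


def _contains(scope: str, resource_id: str) -> bool:
    """True if resource_id is the scope itself or sits underneath it.

    Azure resource IDs are compared case-insensitively.
    """
    scope = scope.rstrip("/").lower()
    resource_id = resource_id.rstrip("/").lower()
    return resource_id == scope or resource_id.startswith(scope + "/")


def assignment_applies(cluster_id: str, scope: str,
                       exclusions: Iterable[str] = ()) -> bool:
    """Simplified model: is an AKS cluster covered by an assignment?

    Handles subscription and resource group scopes only.
    """
    if not _contains(scope, cluster_id):
        return False
    return not any(_contains(excluded, cluster_id) for excluded in exclusions)
```

The explicit "/" boundary in the prefix check stops a resource group such as aks-rg2 from being treated as part of aks-rg.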

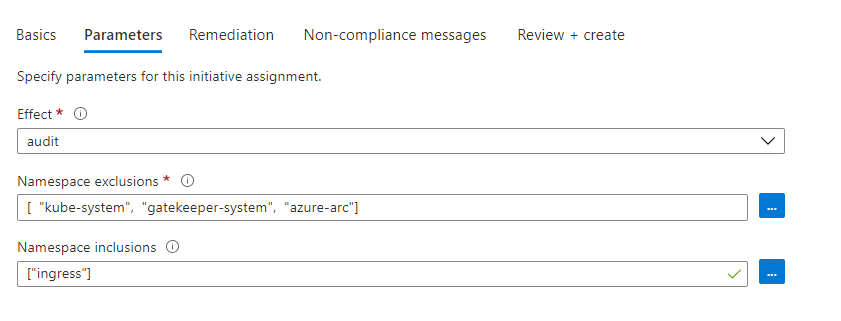

This applies the initiative to our clusters, but, as mentioned earlier, we want to scope some of our initiatives to specific namespaces. For example, the Nginx Ingress initiative should only be applied to our Nginx namespace; we don’t want it applied to the rest of our workloads, as it is too permissive. To do this, we use the assignment’s Parameters tab, which lets us set the initiative parameters.

First, we can define what effect the policy has:

- Audit - this will log whether workloads are in compliance or not, but not do anything to stop them from running. This is an excellent place to start when applying policies so you can see what workloads are not in compliance and look to fix them without breaking anything

- Deny - this will prevent any workloads that do not meet the policy from running. It will not stop pods that are already running, but if they are recreated, they will not start

- Disabled - The policy does nothing
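As a rough summary, the three effects can be modelled as below. This is a simplified sketch of the behaviour described above, not Azure Policy’s implementation; in particular, it assumes that pods already running when Deny is switched on keep running but are still reported as non-compliant by the audit.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    runs: bool      # does the pod get to run?
    reported: bool  # does it show up as non-compliant?


def apply_effect(effect: str, violates: bool, already_running: bool) -> Outcome:
    """Simplified model of the Audit, Deny and Disabled effects."""
    effect = effect.lower()
    if effect == "disabled" or not violates:
        return Outcome(runs=True, reported=False)
    if effect == "audit":
        # Audit only records the violation; the pod still runs.
        return Outcome(runs=True, reported=True)
    if effect == "deny":
        # Deny is enforced at admission, so a pod that is already running
        # keeps running until it is recreated; new pods are rejected.
        return Outcome(runs=already_running, reported=True)
    raise ValueError(f"unknown effect: {effect}")
```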

Once we select the effect, we then have two namespace parameters:

- Namespace Exclusions - we can use this to define which namespaces this policy does not apply to. If you have a policy that you want to apply to all namespaces, except a few, this is the one to use. This defaults to include some built-in namespaces that you should not apply policies to

- Namespace Inclusions - this defines which namespaces the initiative should be applied to and excludes everything else. Use this if you want a policy to be applied only to a few namespaces, like our Nginx Ingress policy. Here’s what that would look like.
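The way the two namespace parameters combine can be summarised in a few lines. This is a simplified model (policy_applies is a hypothetical name): an excluded namespace is never evaluated, and a non-empty inclusion list restricts evaluation to the namespaces on it. The namespace names in the usage note are illustrative.

```python
from typing import Sequence


def policy_applies(namespace: str, excluded: Sequence[str],
                   included: Sequence[str]) -> bool:
    """Simplified model of Namespace Exclusions and Namespace Inclusions.

    Exclusion always wins. An empty inclusion list means "every namespace
    that is not excluded"; a non-empty one limits evaluation to its members.
    """
    if namespace in excluded:
        return False
    return not included or namespace in included
```

For the Nginx Ingress initiative you might pass included=["ingress-nginx"], so a namespace such as "payments" is left alone; for the standard workload initiative you would leave the inclusion list empty and rely on the exclusions.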

Once this is done, the initiatives are assigned, but if you’ve not enabled Azure Policy on your cluster, nothing will happen until you do.

Enabling Azure Policy in your AKS Cluster

If you previously deployed your cluster with PSPs enabled, or with no security features, then Azure Policy will not be running in your cluster, so we need to enable it.

Firstly, if you had PSPs running previously, you need to disable them; you cannot run PSP and Azure Policy at the same time. To disable PSPs, use the Azure CLI with this command:

```bash
az aks update \
    --resource-group myResourceGroup \
    --name myAKSCluster \
    --disable-pod-security-policy
```

You can also delete any PSPs in your cluster if you want to clean them up, but you don’t have to.

Once you have disabled PSPs, you can enable Azure Policy. This can be done through the portal by selecting your AKS cluster, going to Policies and clicking Enable, or through the CLI with the command below.

```bash
az aks enable-addons --addons azure-policy --name myAKSCluster --resource-group myResourceGroup
```

If you look at your cluster, you should now see a new “gatekeeper-system” namespace, with pods running in it. You will need to wait a while before things are fully running, and compliance data is reported to the Azure portal.
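Rather than repeatedly checking by eye, you can poll the pods in that namespace. A minimal sketch, assuming you pass in the parsed output of kubectl get pods -n gatekeeper-system -o json; gatekeeper_ready is a hypothetical helper, and “ready” here only means every pod is Running with all containers reporting ready, not that compliance data has reached the portal.

```python
from typing import Any, Dict


def gatekeeper_ready(pod_list: Dict[str, Any]) -> bool:
    """Return True when every listed pod is Running with all containers ready.

    Assumes `pod_list` is the parsed JSON from
    `kubectl get pods -n gatekeeper-system -o json`.
    """
    pods = pod_list.get("items", [])
    if not pods:
        # No pods yet: the add-on is still being deployed.
        return False
    for pod in pods:
        status = pod.get("status", {})
        if status.get("phase") != "Running":
            return False
        containers = status.get("containerStatuses", [])
        if not containers or not all(c.get("ready") for c in containers):
            return False
    return True
```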

Review Compliance and Debug Policies

Once you have assigned your initiatives and enabled Azure Policy, you can review compliance data reported to the Azure Portal. This is particularly important if your policies are in Audit mode, so you can check whether they are working as expected before you turn on enforcement mode.

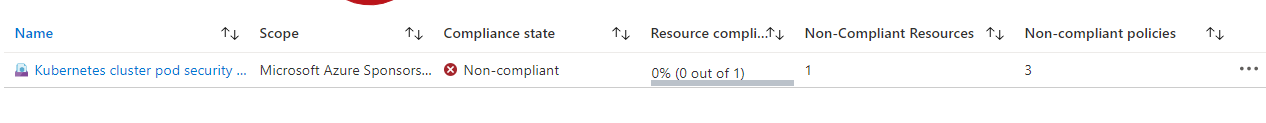

To view compliance data, you can use the Policy section of the Azure portal. Go to the “Compliance” section and then filter to see the initiatives you are interested in. This will give you a top-level view of whether your initiatives are compliant.

We can then click on a specific initiative to see which policies are not in compliance.

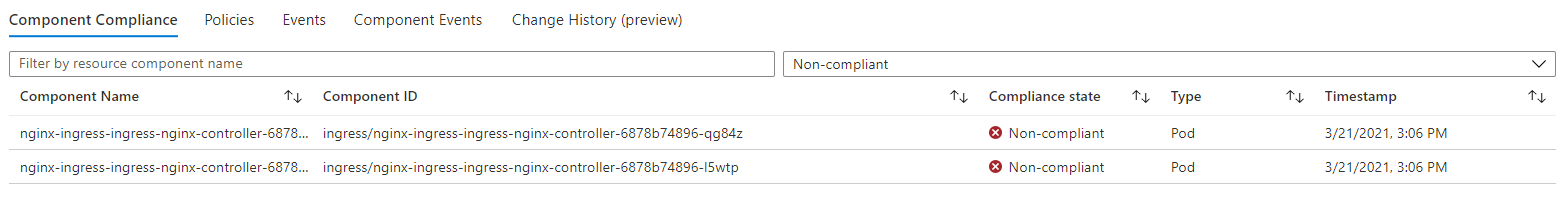

If you click on a specific policy, this will then show you which AKS clusters are not in compliance, and if you then click on the details for that, it will show you which specific pods are not in compliance.

Whilst you can see what pods are not in compliance, what you can’t see is what part of the policy they are not in compliance with. If you have a policy with multiple rules, like the user and group ID policy, you cannot tell which of these the container is having issues with. To diagnose this, you need to look at the details on the cluster itself.

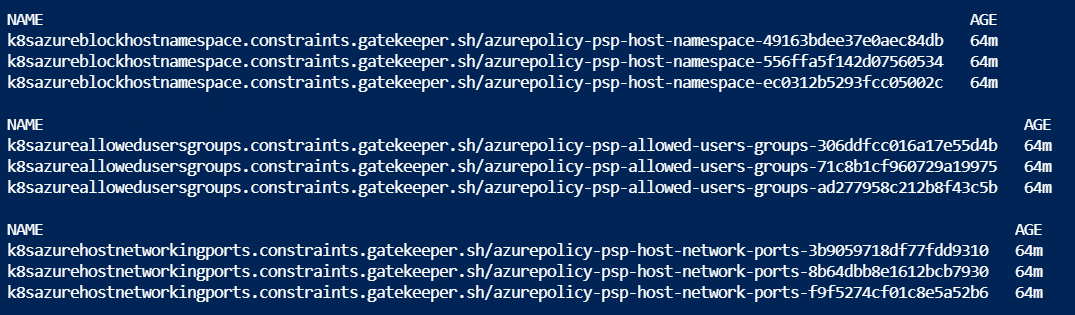

Gatekeeper records its audit results on its constraint objects, which are custom resources (CRDs), so if you run kubectl get constraints you should see a list of all the constraints in your cluster.

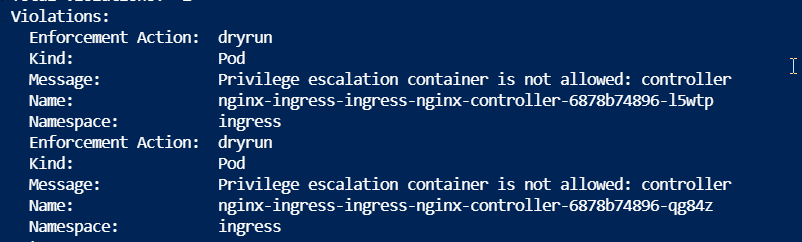

You will notice that there are multiple instances of the same constraint; I’m not overly clear at this point why this is the case. There is also no easy way to find the constraint you are looking for; you need to view them all to find the data you need. To find the policy that is being violated, you can describe a specific constraint, and you will see details about why the audit failed.

This should allow you to find which specific item is causing the violation and look to resolve it.
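Because there is no easy way to find the right constraint, it can help to search them all at once. The sketch below assumes you have parsed the output of kubectl get constraints -o json, and that, as Gatekeeper’s audit does, violations are recorded under each constraint’s status.violations with name, namespace, message and enforcementAction fields. find_violations is a hypothetical helper that returns every violation recorded against a given pod, together with the constraint that raised it.

```python
from typing import Any, Dict, List, Optional


def find_violations(constraints: Dict[str, Any], pod_name: str,
                    namespace: Optional[str] = None) -> List[Dict[str, str]]:
    """Collect audit violations recorded against one pod across all constraints.

    Assumes `constraints` is the parsed JSON from `kubectl get constraints -o json`.
    """
    matches = []
    for constraint in constraints.get("items", []):
        # Constraints that have not been audited yet may have no status.
        violations = (constraint.get("status") or {}).get("violations") or []
        for violation in violations:
            if violation.get("name") != pod_name:
                continue
            if namespace is not None and violation.get("namespace") != namespace:
                continue
            matches.append({
                "constraintKind": constraint.get("kind", ""),
                "constraintName": constraint.get("metadata", {}).get("name", ""),
                "enforcementAction": violation.get("enforcementAction", ""),
                "message": violation.get("message", ""),
            })
    return matches
```

The message field is what tells you which part of a multi-rule policy, such as the user and group ID policy, the pod is failing.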

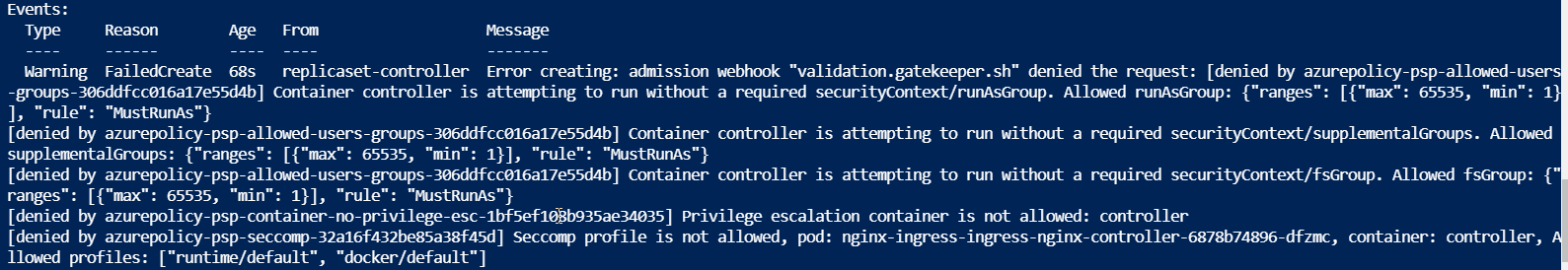

Once all your policies are working as you expect, you can edit your initiative assignment to “Deny” mode. This will prevent any containers that violate policy from launching. You can see the rejections in the events of the ReplicaSet.

Pod Security Contexts

I have noticed with Azure Policy that you really need to make sure the security context on your pod is correct, especially when using the audit functionality. With PSPs, validation was done at deploy time, and so long as your pod complied with the policy (for example, it didn’t try to run as root), it would pass. With Azure Policy, if your pod’s security context doesn’t define the restrictions, you may fail the audit, even if the pod is actually complying. So make sure that you define the security context for your pod and containers correctly, for example:

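```yaml
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo
spec:
  # Pod-level security context: applies to every container in the pod
  securityContext:
    runAsUser: 1000
    runAsGroup: 3000
    fsGroup: 2000
  volumes:
  - name: sec-ctx-vol
    emptyDir: {}
  containers:
  - name: sec-ctx-demo
    image: busybox
    command: [ "sh", "-c", "sleep 1h" ]
    volumeMounts:
    - name: sec-ctx-vol
      mountPath: /data/demo
    # Container-level security context: set explicitly so the audit can see it
    securityContext:
      allowPrivilegeEscalation: false
```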
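One way to catch missing settings before deployment is to check that the fields are set explicitly. The sketch below is a hypothetical check (missing_security_context is not part of any tool) that works on a pod spec parsed from YAML or JSON. The fields it looks for simply mirror the example manifest above, so adjust the list to whatever your initiative’s policies evaluate. It only reports missing fields and does not judge whether the values would pass a policy.

```python
from typing import Any, Dict, List

# Pod-level fields set in the example manifest; adjust to your policies.
POD_FIELDS = ("runAsUser", "runAsGroup", "fsGroup")


def missing_security_context(pod_spec: Dict[str, Any]) -> List[str]:
    """List security context fields that are not set explicitly.

    Checks the pod-level securityContext and, for each entry in
    `containers`, whether allowPrivilegeEscalation is set.
    """
    missing = []
    pod_ctx = pod_spec.get("securityContext") or {}
    for field in POD_FIELDS:
        if field not in pod_ctx:
            missing.append(f"spec.securityContext.{field}")
    for container in pod_spec.get("containers", []):
        ctx = container.get("securityContext") or {}
        if "allowPrivilegeEscalation" not in ctx:
            name = container.get("name", "?")
            missing.append(
                f"spec.containers[{name}].securityContext.allowPrivilegeEscalation")
    return missing
```

Run against the spec section of the manifest above, it reports nothing missing; run against a bare container spec, it lists all four fields.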