AKS, Azure AD Authentication and Automation

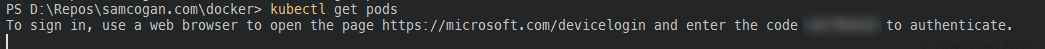

Azure Kubernetes Service (AKS) has supported Azure AD integration for a little while now. When you set this up, you can associate Azure AD users or groups with Kubernetes roles. When a user connects to the cluster for the first time, they are presented with a request to log in to Azure AD.

This process works great for users who need to connect to the cluster. However, it turns out that Azure AD auth for AKS does not yet support service principals. If you connect to your AKS cluster as part of a CI/CD deployment or another automated process and attempt to get the cluster credentials, the response is the same prompt to log in seen above, which a service principal cannot handle. There is a feature request to resolve this issue here; however, there is no timeline for when this will be fixed.

So in the meantime, how do you connect to an AKS cluster that is using Azure AD auth as part of your automation?

Admin Credentials

Let us be clear before we start; this is a terrible idea for anything beyond a basic development cluster and should never be done in production. However, it does work around the issue.

When you use the “az aks get-credentials” command, it is possible to bypass the Azure AD auth by specifying the --admin flag. This gets you the cluster-admin credentials, which are not managed by Azure AD. This option is intended for use when you first deploy the cluster and have not yet added any roles that allow Azure AD users in.

Using this flag, you can retrieve admin credentials that work without Azure AD; however, this means your automation is running as cluster-admin. As mentioned, this is a terrible idea in terms of security and least-privilege access, and I do not recommend it, but if you’re looking for a quick and dirty solution, it will work.
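As a rough sketch of what that looks like in a pipeline script, the helper below wraps the call. The function name and the resource group and cluster names in the example call are placeholders I have made up for illustration; only the az aks get-credentials command and its --admin flag come from the text above.

```shell
# Sketch only: fetch cluster-admin credentials, bypassing Azure AD sign-in.
# The resource group and cluster names passed in are placeholders.
get_admin_credentials() {
  local resource_group="$1"
  local cluster_name="$2"

  # --admin requests the cluster-admin kubeconfig rather than the
  # Azure AD backed user kubeconfig.
  az aks get-credentials \
    --resource-group "$resource_group" \
    --name "$cluster_name" \
    --admin
}

# Example call (placeholder names):
# get_admin_credentials my-resource-group my-aks-cluster
```

Again, this is only suitable for a throwaway development cluster.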

Service Accounts

The proper way to solve this problem until we can use service principals is through the use of Kubernetes service accounts. Kubernetes has supported the creation of service accounts inside the cluster for as long as RBAC has been around. Commonly service accounts are used for applications running inside your cluster that need to talk to the Kubernetes API, but there is no reason why external applications cannot use these for authentication.

API Restrictions

It is now possible to lock down which IPs can access your AKS API server. If you have used this feature, you will need to make sure you whitelist the IPs that your automation process runs from so it can authenticate.
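If you manage the authorized ranges with the Azure CLI, whitelisting a build agent might look something like the sketch below. The function name and argument values are hypothetical. As I understand it, this flag sets the complete list of ranges rather than adding to it, so check the current Azure CLI documentation before relying on it.

```shell
# Sketch only: allow a build agent's address range to reach the AKS API server.
# All three arguments are placeholders for your own values.
allow_agent_ip_range() {
  local resource_group="$1"
  local cluster_name="$2"
  local ip_ranges="$3"   # comma-separated CIDRs, e.g. "203.0.113.0/24"

  # Assumption: the value passed here becomes the full list of authorized
  # ranges, so include any ranges that are already allowed.
  az aks update \
    --resource-group "$resource_group" \
    --name "$cluster_name" \
    --api-server-authorized-ip-ranges "$ip_ranges"
}

# Example call (placeholder values):
# allow_agent_ip_range my-resource-group my-aks-cluster "203.0.113.0/24"
```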

Creating Service Accounts

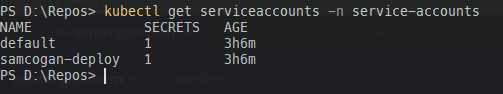

We can create a service account using kubectl, with either the create command or a YAML definition. One thing to bear in mind is that service accounts sit inside a namespace. If you’re creating a service account for a specific deployment, then you may want to create it inside the same namespace, but if you’re looking to be more generic, then you may want to create a namespace to hold your service accounts.

kubectl create namespace service-accounts

Then we can create the service account itself:

kubectl create serviceaccount samcogan-deploy -n service-accounts
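For the YAML route mentioned above, a minimal sketch is to pipe a ServiceAccount definition into kubectl apply. The function name is my own invention; the account and namespace names in the example call are the ones used in this post.

```shell
# Sketch only: create a service account from a YAML definition.
# kubectl reads the manifest from standard input via "-f -".
create_service_account() {
  local name="$1"
  local namespace="$2"

  kubectl apply -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${namespace}
EOF
}

# Example call using the names from this post:
# create_service_account samcogan-deploy service-accounts
```

Keeping the definition in YAML makes it easier to store alongside the role and binding files used later.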

Currently, the service account we have created doesn’t have any rights to do anything in the cluster, so we need to change that. For my deployments I am using Helm to deploy the Kubernetes resources, so most of the work is done by the Helm service account and not my deployment account (until Helm 3 solves this at least). Given this, my service account only needs enough rights to be able to call Helm. To enable this, we create a ClusterRole granting the required permissions. I am creating a ClusterRole rather than just a role as I want to re-use these permissions for multiple namespaces and different service accounts.

We create the ClusterRole using a YAML file.

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: deployment-services
rules:
- apiGroups: [""]
  resources: ["pods/portforward"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "get"]

We save this as ClusterRole.yaml and apply it:

kubectl apply -f ClusterRole.yaml

This ClusterRole grants the rights to list and get pods, and create port forwards, which is the minimum required to use Helm.

At present, this ClusterRole exists, but it is not used by anything. We need to create a binding to assign this role to our service account. Here we are using a RoleBinding rather than a ClusterRoleBinding because we only want this service account to have these rights on a specific namespace, not the whole cluster. If we want to grant the same rights to a second namespace, we can create a second RoleBinding.

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment
  namespace: samcogan-dev
subjects:
- kind: ServiceAccount
  name: samcogan-deploy
  namespace: service-accounts
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: deployment-services

We save this as Rolebinding.yaml and apply it:

kubectl apply -f Rolebinding.yaml

Now our service account has the required rights, but only on the samcogan-dev namespace.
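One way to check this, sketched below, is to ask the API server with kubectl auth can-i while impersonating the service account. The function name is hypothetical, and impersonation itself needs sufficient rights, so I am assuming you run this from a session that is allowed to impersonate service accounts (for example as a cluster administrator).

```shell
# Sketch only: ask the API server whether the service account may perform
# an action in a given namespace, by impersonating it with --as.
can_service_account() {
  local verb="$1"
  local resource="$2"
  local namespace="$3"

  kubectl auth can-i "$verb" "$resource" \
    --namespace "$namespace" \
    --as "system:serviceaccount:service-accounts:samcogan-deploy"
}

# Example calls:
# can_service_account list pods samcogan-dev
# can_service_account list pods default
```

The command prints yes or no. With only the RoleBinding above in place, I would expect yes for samcogan-dev and no for any other namespace.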

Deploying with the Service Account

Now that we have a working service account, we need to configure our automation to use it. How you do this varies depending on what tool you are using. In this example, we will look at using Azure DevOps.

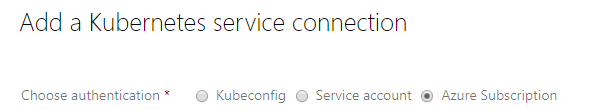

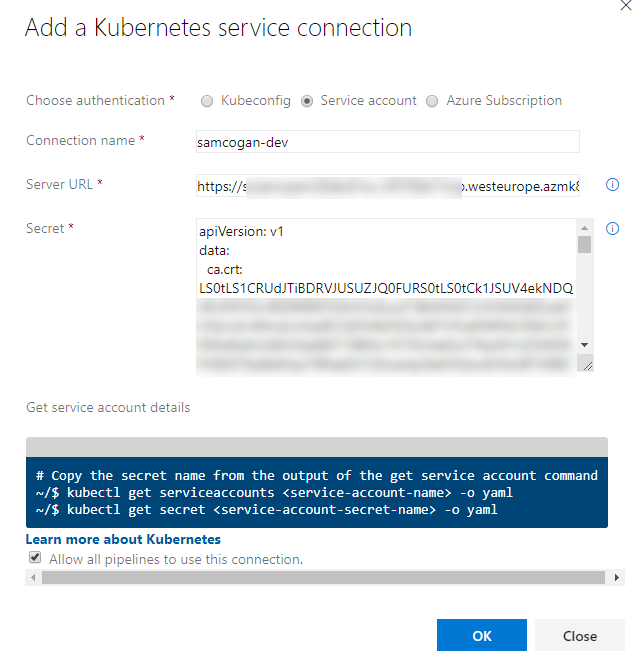

In Azure DevOps we need to create a new service connection. This can be found under “project settings” and then “service connections”. If we click on “New Service Connection” we get a list of types, one of which is Kubernetes. Selecting this presents a window which has 3 options for how to connect.

We need to select “Service account”. This will then ask for 3 items - name, server URL and secret.

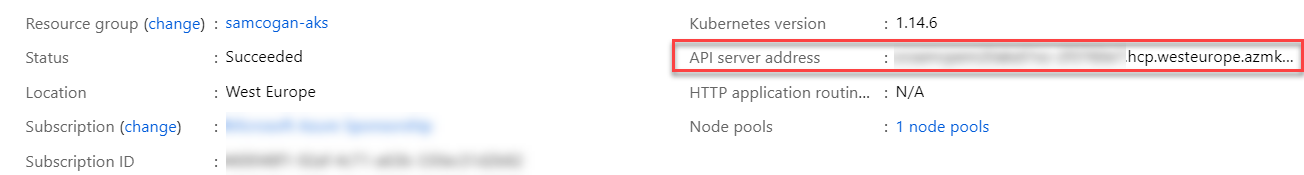

The name is up to you; it is free text that allows you to identify the connection quickly.

The Server URL is the URL of your AKS Instance; you can find this by looking at the AKS instance in the Azure portal.

You will need to add https:// to the front of the URL.
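If you would rather fetch the address from the command line than the portal, something like the following sketch should work. It assumes the fqdn property returned by az aks show is the API server address you want (a private cluster may need a different property); the function name and example arguments are placeholders.

```shell
# Sketch only: build the server URL for the service connection.
# Resource group and cluster name are placeholders.
get_server_url() {
  local resource_group="$1"
  local cluster_name="$2"
  local fqdn

  # Assumption: "fqdn" is the API server host name reported for the cluster.
  fqdn="$(az aks show \
    --resource-group "$resource_group" \
    --name "$cluster_name" \
    --query fqdn \
    --output tsv)"

  # The service connection expects the scheme as part of the URL.
  echo "https://${fqdn}"
}

# Example call (placeholder names):
# get_server_url my-resource-group my-aks-cluster
```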

Finally, the secret holds the token and certificate Azure DevOps needs to connect using the service account. We obtain this by going back to the command line and first running the command to retrieve a list of the secrets in the same namespace as your service account.

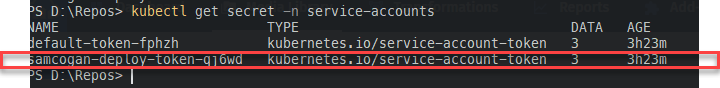

kubectl get secret -n service-accounts

This command lists all the secrets, one of which should have the same name as your service account with some letters appended.
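One caveat: on newer clusters (Kubernetes 1.24 and later, as far as I am aware) this token secret is no longer created automatically, so the list may not contain one. In that case, a sketch of creating the secret yourself is below. The function name and the "-token" suffix on the secret name are my own choices for illustration.

```shell
# Sketch only: create a long-lived token secret for a service account on
# clusters that do not generate one automatically.
create_token_secret() {
  local service_account="$1"
  local namespace="$2"

  # The annotation tells Kubernetes which service account the token is for;
  # the control plane then fills in the token and CA certificate.
  kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${service_account}-token
  namespace: ${namespace}
  annotations:
    kubernetes.io/service-account.name: ${service_account}
type: kubernetes.io/service-account-token
EOF
}

# Example call using the names from this post:
# create_token_secret samcogan-deploy service-accounts
```

Either way, you end up with the name of a secret that belongs to the service account.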

Make a note of this name and then run the following:

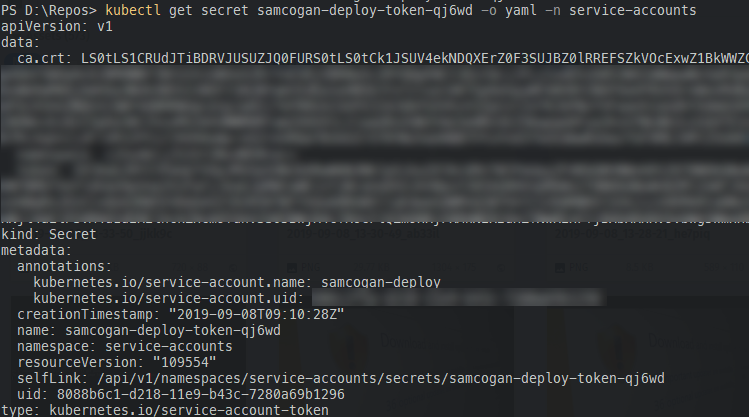

kubectl get secret <name of secret> -o yaml -n service-accounts

This command outputs the secret in YAML format.

Select all of the output from this command and copy it. Paste the text into the secret field. Your Service Connection should now look like this:

Click OK to save it.

Using the Service Connection

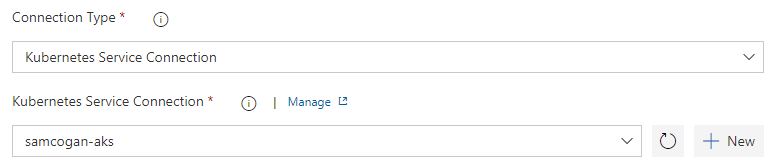

Now that you have created the Service Connection, you can use it in any task in your build and release pipelines that needs to connect to Kubernetes. The most common uses are in the Kubernetes and Helm tasks.

Other automation tools should offer similar techniques for connecting to Kubernetes; if that is not the case, then you would need to look at authentication via the command line by passing a certificate or bearer token. For more details on this, see the Kubernetes documentation.
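As a rough illustration of the bearer token route, the sketch below reads the token and CA certificate out of the service account secret and builds a kubectl context from them. The function name, the cluster name "aks-cluster" and the arguments are hypothetical; the service account and namespace names are the ones used in this post.

```shell
# Sketch only: build a kubeconfig context that authenticates with the
# service account's bearer token. Arguments are placeholders.
configure_kubectl_for_service_account() {
  local secret_name="$1"    # name of the service account token secret
  local server_url="$2"     # API server URL, including https://
  local namespace="service-accounts"
  local token ca_file

  # Secret values are base64-encoded; decode the token before use.
  token="$(kubectl get secret "$secret_name" -n "$namespace" \
    -o jsonpath='{.data.token}' | base64 --decode)"

  # Write the cluster CA certificate to a temporary file.
  ca_file="$(mktemp)"
  kubectl get secret "$secret_name" -n "$namespace" \
    -o jsonpath='{.data.ca\.crt}' | base64 --decode > "$ca_file"

  # Register the cluster, the credentials, and a context tying them together.
  kubectl config set-cluster aks-cluster \
    --server="$server_url" \
    --certificate-authority="$ca_file" \
    --embed-certs=true
  kubectl config set-credentials samcogan-deploy --token="$token"
  kubectl config set-context samcogan-deploy \
    --cluster=aks-cluster --user=samcogan-deploy --namespace=samcogan-dev
  kubectl config use-context samcogan-deploy

  # The certificate is embedded in the kubeconfig, so the file can go.
  rm -f "$ca_file"
}

# Example call (placeholder values):
# configure_kubectl_for_service_account <name of secret> https://<server address>
```

Treat the token like any other credential: keep it in your automation tool's secret store rather than in the script itself.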