Accessing a Private AKS Cluster with Additional Private Endpoints

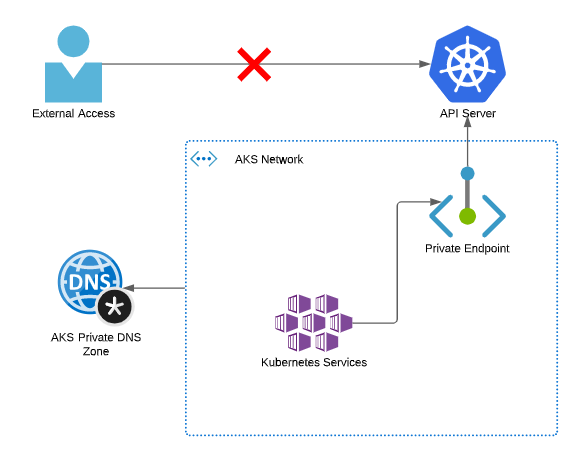

When creating an AKS cluster, you have the option to make it a private cluster. When you do this, the managed API server created for you only has internal IP addresses, and your Kubernetes nodes talk to it over your vNet. The API server is no longer exposed over the internet, as it is with a standard AKS deployment. This is an excellent option if you are concerned about the security of your cluster and its API server and want to lock them down as much as possible. It is also a good choice if you want to make sure the traffic between your nodes and the API server doesn’t transit the internet.

However, it does come with a downside: it becomes much more difficult to connect to the API server to manage the cluster or run automation against it. This is particularly an issue if your AKS cluster is on an isolated network with no direct connectivity from your management network. The Microsoft docs on private clusters recommend some possible approaches to deal with this:

- Deploy a VM in the same network as AKS and use this as a remote management server

- Use virtual network peering to connect your management network to the AKS network

- Use ExpressRoute or VPN to connect your on-premises network to the AKS network

- Use the AKS command invoke feature to run commands remotely on your AKS cluster

All of these options can work, but they might not fit your use case. If you’re intentionally running your AKS cluster on an isolated network, you might not want full connectivity between it and your management network, which rules out peering and ExpressRoute/VPN. Deploying a VM in the same network is possible, but what if you have lots of clusters in different locations and networks? You don’t want many management VMs, especially if you’re using them for automation. Command Invoke is a good solution if you need to run the odd command, but it isn’t feasible if you need to run more complex operations or use automation services.

There is, however, another option that isn’t currently in the docs but might provide a more straightforward approach: using additional Private Endpoints.

AKS Private Endpoints

When you create a private AKS cluster, you are creating a Private Endpoint that injects the API server into your virtual network. If you’re not familiar with Private Link, I recommend reading my article on this for a quick introduction.

The private endpoint gives the API server a private IP address in your virtual network. The Private DNS configuration that accompanies the private endpoint means you can still talk to the API server using its regular DNS name, which resolves to that private IP.
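To confirm that name resolution behaves this way from a particular machine, a check along the following lines can help. This is a minimal sketch, assuming a Linux host with `getent`; the helper names (`is_private_ipv4`, `resolve_ipv4`, `check_apiserver_dns`) are hypothetical, and the private-range test only covers the RFC 1918 IPv4 ranges.

```shell
# Hypothetical helper: succeeds if an IPv4 address falls in an RFC 1918 range
# (10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16).
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.*)
      # 172.16.0.0/12 covers second octets 16 to 31
      local second_octet
      second_octet=$(printf '%s\n' "$1" | cut -d. -f2)
      [ "$second_octet" -ge 16 ] && [ "$second_octet" -le 31 ]
      ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: prints the first IPv4 address a name resolves to, using
# the host's normal resolver (and therefore any linked Private DNS zones).
resolve_ipv4() {
  getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# Hypothetical helper: checks that the API server name resolves to a private IP.
check_apiserver_dns() {
  local ip
  ip=$(resolve_ipv4 "$1")
  if [ -z "$ip" ]; then
    echo "could not resolve $1" >&2
    return 1
  fi
  if is_private_ipv4 "$ip"; then
    echo "$1 -> $ip (private)"
  else
    echo "$1 -> $ip (not private)" >&2
    return 1
  fi
}
```

For a private cluster, the name to pass in is the cluster's private FQDN, which `az aks show --resource-group <AKS resource group> --name <AKS Cluster Name> --query privateFqdn --output tsv` returns.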

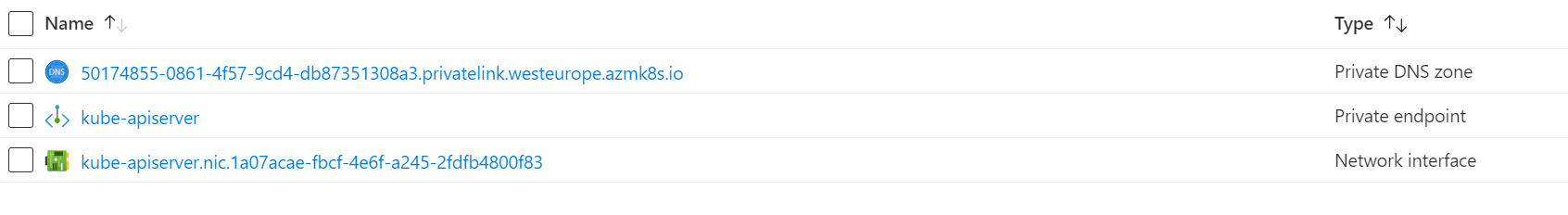

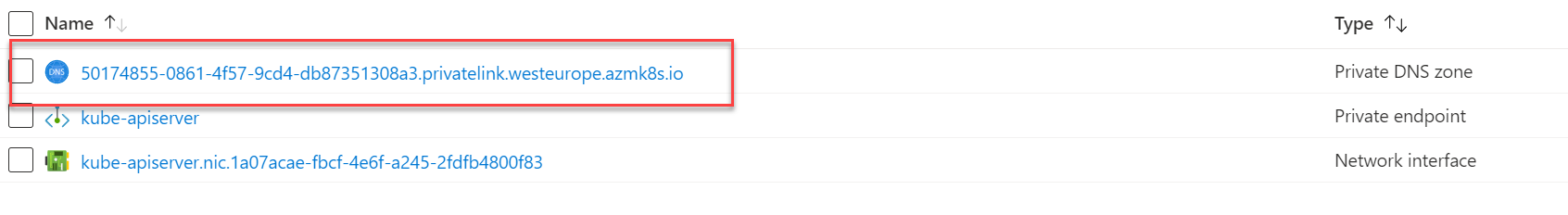

Unlike with other services, this Private Endpoint is created as part of the AKS deployment, so you don’t create it separately. However, if you look in your AKS node resource group, you will see the Private Endpoint, its Network Interface and the Private DNS zone.
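If you prefer the command line, the same resources can be listed from the node resource group. A minimal sketch, assuming an authenticated Azure CLI session; `aks_node_rg` and `list_aks_private_link_resources` are hypothetical helper names.

```shell
# Hypothetical helper: prints the node resource group (MC_...) of an AKS cluster.
aks_node_rg() {
  az aks show --resource-group "$1" --name "$2" \
    --query nodeResourceGroup --output tsv
}

# Hypothetical helper: lists the Private Endpoints, network interfaces and
# Private DNS zones in the cluster's node resource group. For a private
# cluster these include the resources AKS created for the API server.
list_aks_private_link_resources() {
  local node_rg
  node_rg=$(aks_node_rg "$1" "$2") || return 1
  az network private-endpoint list --resource-group "$node_rg" --output table
  az network nic list --resource-group "$node_rg" --output table
  az network private-dns zone list --resource-group "$node_rg" --output table
}
```

Usage: `list_aks_private_link_resources <AKS resource group> <AKS Cluster Name>`.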

Additional AKS Private Endpoints

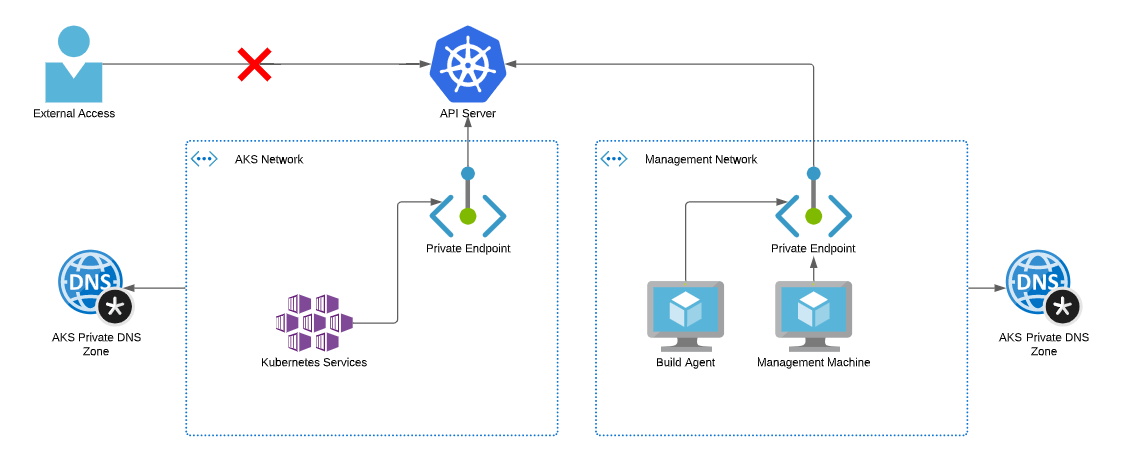

If you’ve used Private Endpoints with other services (SQL, Storage, Web Apps, etc.), you’ll know that you can create multiple Private Endpoints for the same resource, provided each one is attached to a different virtual network. This allows you to connect to the resource from different networks, and you can do the same with the AKS API server. In the docs, the creation of the Private Endpoint is wrapped up in the cluster deployment process, but nothing stops you from using the normal Private Endpoint creation process to create additional endpoints that inject the API server into other vNets. By doing this, you can inject the API server into your management network, allowing your users or automation to reach it on a private IP while keeping access to the service private. This means you don’t need to peer your networks and have full end-to-end connectivity between them; you can talk to just the API server (see the caveat below).

A Warning on Port Forwarding

I mentioned earlier that this approach avoids peering networks and providing full connectivity, exposing only the API server. There is a caveat you need to be aware of: Kubernetes port forwarding. Using port forwarding (`kubectl port-forward`), you can forward a port on your machine to a port on a pod in the cluster. The traffic is tunnelled through the API server, but it gives you network connectivity to workloads on those ports rather than just to the API server. If this is a concern and you want to prevent it, make sure the Kubernetes RBAC roles you assign to users do not grant the `create` verb on the `pods/portforward` subresource.
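One way to audit this is `kubectl auth can-i`, checking the `create` verb on the `portforward` subresource of pods while impersonating the user in question. A minimal sketch; `can_port_forward` is a hypothetical helper, and `--as` only works if your own identity is allowed to impersonate users.

```shell
# Hypothetical helper: prints "yes" and succeeds if the user may run
# `kubectl port-forward` in the namespace; prints "no" and fails otherwise.
# Port forwarding requires the "create" verb on the pods/portforward subresource.
can_port_forward() {
  kubectl auth can-i create pods --subresource=portforward \
    --as "$1" --namespace "${2:-default}"
}
```

Usage: `can_port_forward <user> <namespace>`; the namespace defaults to `default` when omitted.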

Creating the Private Endpoint

To create the Private Endpoint, we will use the standard Private Endpoint creation process rather than going through AKS as we did when creating the cluster. We just need to provide the details of the service we want to connect to and the network we want to connect it to. One area, DNS, is tricky, so we will cover it in more detail. In this example, I will use Azure Private DNS. You can use your own DNS servers if you want, but that is a more complex setup; if you want to do this quickly and easily, I recommend using Azure Private DNS.

Azure Portal

To create the Private Endpoint in the portal, click “Create a resource”, search for Private Endpoint, and click Create. On the first page, select the resource group, region and name for your Private Endpoint. You must create the Private Endpoint in the same region as the vNet you want to connect it to (this is your management vNet, not your AKS one).
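If you are not sure which region the management vNet is in, the Azure CLI can tell you. A small sketch; `vnet_region` is a hypothetical helper name.

```shell
# Hypothetical helper: prints the region of a vNet. Create the Private Endpoint
# in this region (the management vNet's region, not the AKS vNet's).
vnet_region() {
  az network vnet show --resource-group "$1" --name "$2" \
    --query location --output tsv
}
```

Usage: `vnet_region <mgmt resource group> <mgmt vnet name>`.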

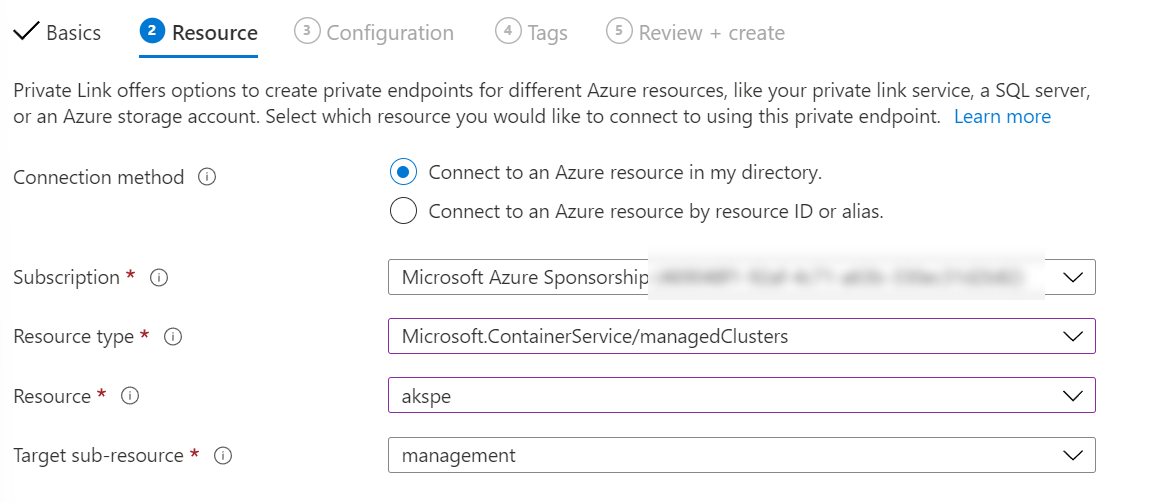

On the next page, select the subscription for your AKS cluster, set the resource type to “Microsoft.ContainerService/managedClusters”, and then select the AKS cluster you want to connect to. The target sub-resource (management) is selected automatically, as it is the only option.
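Behind the scenes, the cluster you pick here is identified by its full resource ID, which is also the value the Azure CLI and Bicep examples in this post take as the target resource. A sketch for retrieving it; `aks_cluster_id` is a hypothetical helper.

```shell
# Hypothetical helper: prints the full resource ID of an AKS cluster, of the form
# /subscriptions/<id>/resourceGroups/<rg>/providers/Microsoft.ContainerService/managedClusters/<name>
aks_cluster_id() {
  az aks show --resource-group "$1" --name "$2" --query id --output tsv
}
```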

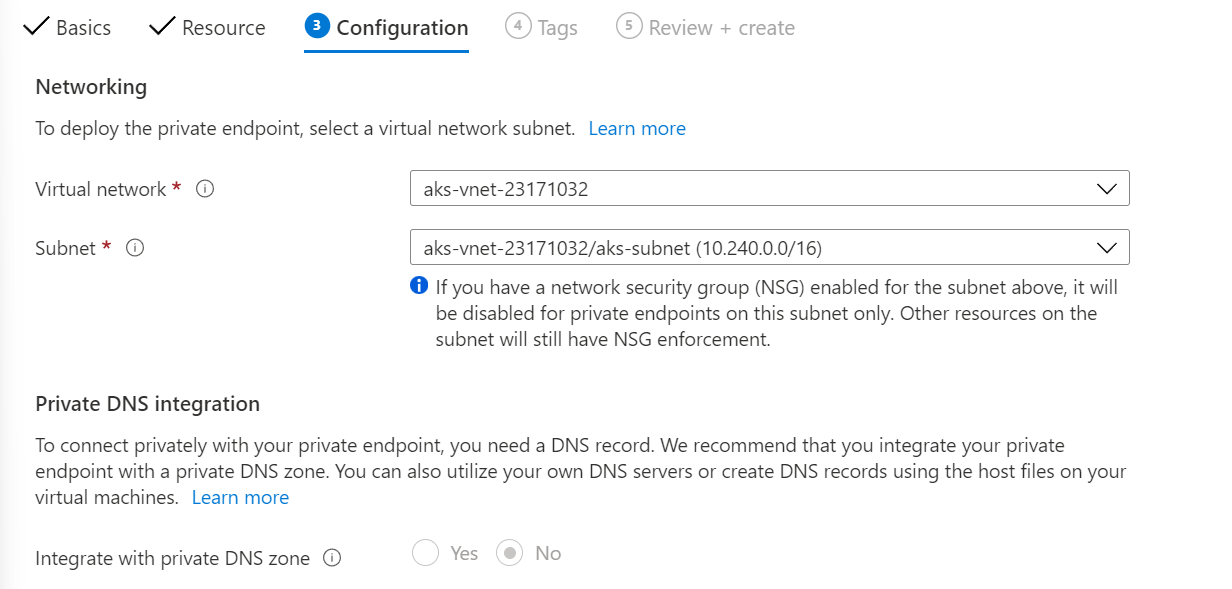

On the next page, select the virtual network and subnet you want the Private Endpoint connected to. You will notice that the DNS option is greyed out. This is OK; we will deal with DNS in a moment.

Finally, complete any tags you want to add, then create the resource.

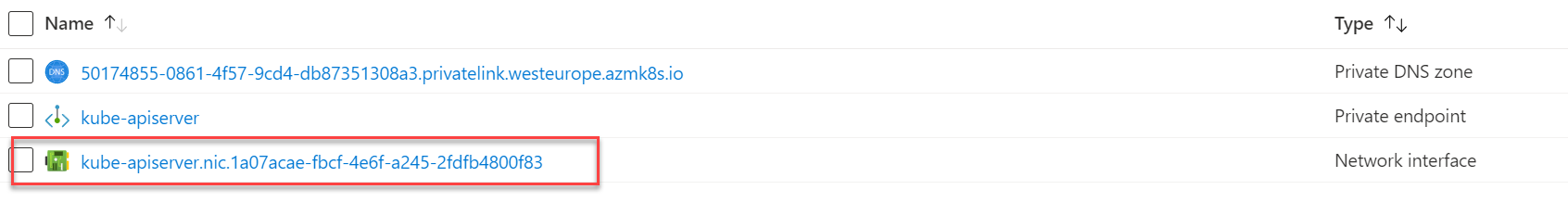

As mentioned, unlike with other resources, the Private Endpoint wizard will not create the Azure Private DNS zone for AKS. DNS creation is tied up in the AKS cluster creation process, so we need to create the zone manually. Go back to your AKS node resource group, find the Private DNS zone created there and copy its name. In my example, the DNS zone name is “50174855-0861-4f57-9cd4-db87351308a3.privatelink.westeurope.azmk8s.io”.
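The zone name can also be read with the Azure CLI. A minimal sketch, assuming you already know the node resource group name (for example from `az aks show --query nodeResourceGroup`) and that this group contains a single Private DNS zone; `aks_private_dns_zone_name` is a hypothetical helper.

```shell
# Hypothetical helper: prints the name of the Private DNS zone that AKS created
# in the node resource group. Assumes the group holds exactly one such zone.
aks_private_dns_zone_name() {
  az network private-dns zone list --resource-group "$1" \
    --query "[0].name" --output tsv
}
```

Usage: `aks_private_dns_zone_name <AKS node resource group>`.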

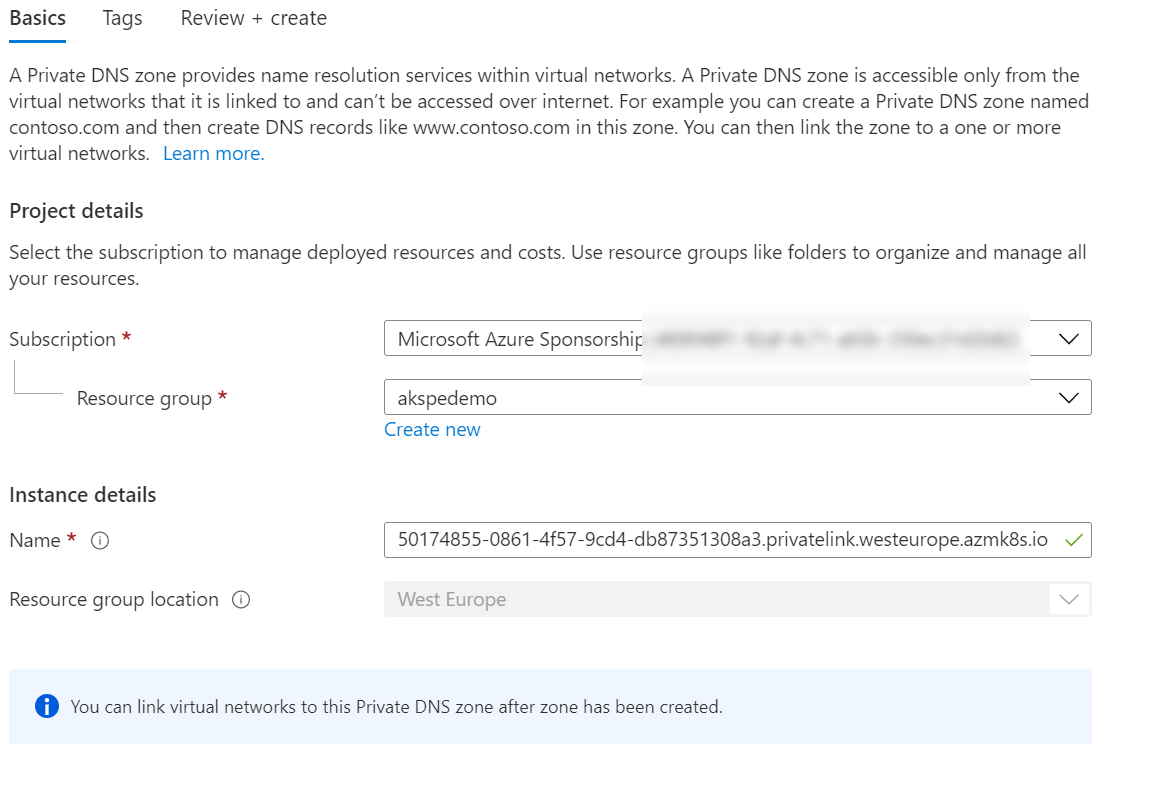

Go back to the resource group you deployed the Private Endpoint into, click “Create”, search for Private DNS Zone, and click Create. In the name field, paste the name of the DNS zone you copied from the AKS node resource group.

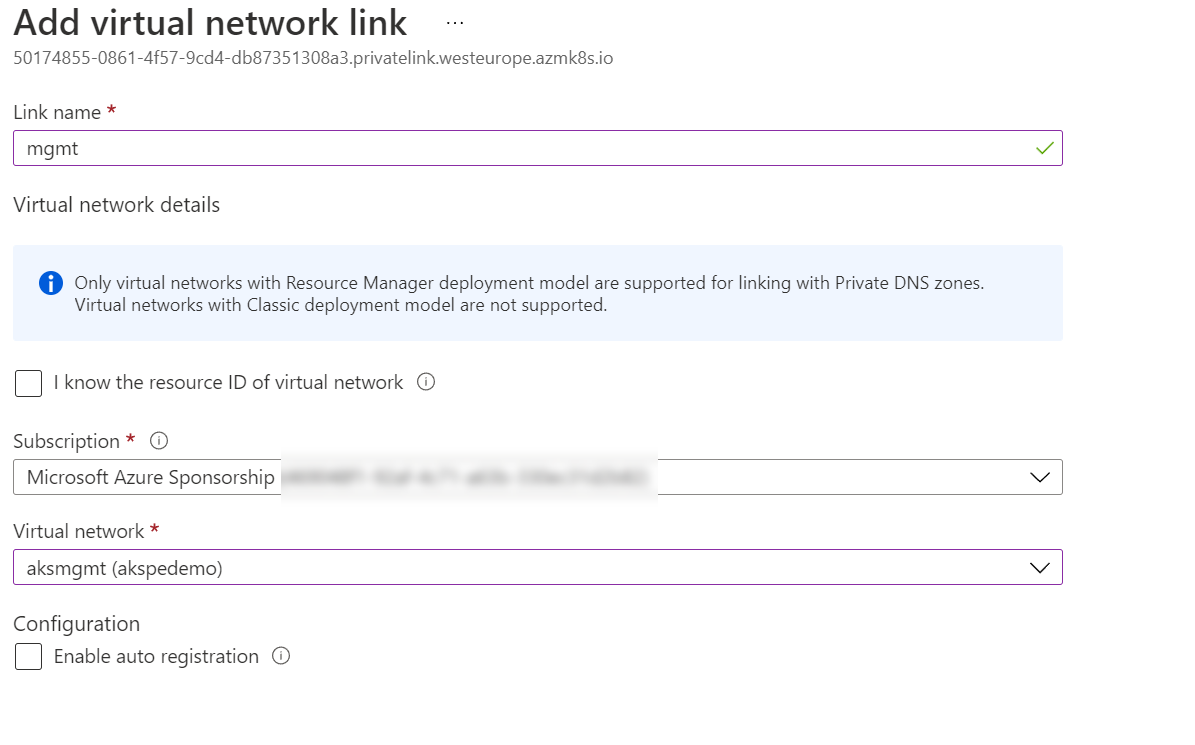

Add any tags you want, then create the zone. Once it is created, open the DNS zone and go to “Virtual Network Links”. Click “Add” and point the link at your management network.

Click OK to add the link.

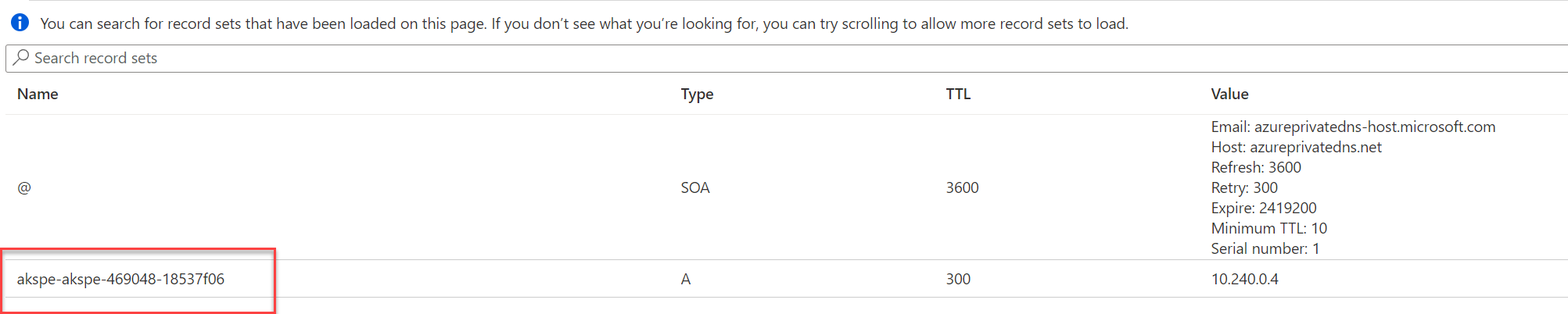

Finally, we need to create the DNS record for the cluster itself. Go back to your AKS node resource group, find the DNS zone and open it. You should see a DNS record for the cluster; copy the name of the record (don’t worry about the IP).
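To read the record name from the CLI instead, list the A records in that zone. A sketch assuming the zone contains a single A record for the cluster; `aks_private_dns_record_name` is a hypothetical helper.

```shell
# Hypothetical helper: prints the name of the first A record in the AKS
# Private DNS zone (the record for the cluster's API server).
# Arguments: <node resource group> <zone name>
aks_private_dns_record_name() {
  az network private-dns record-set a list \
    --resource-group "$1" --zone-name "$2" \
    --query "[0].name" --output tsv
}
```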

Back in your management resource group, find the network interface associated with your Private Endpoint and click on this.

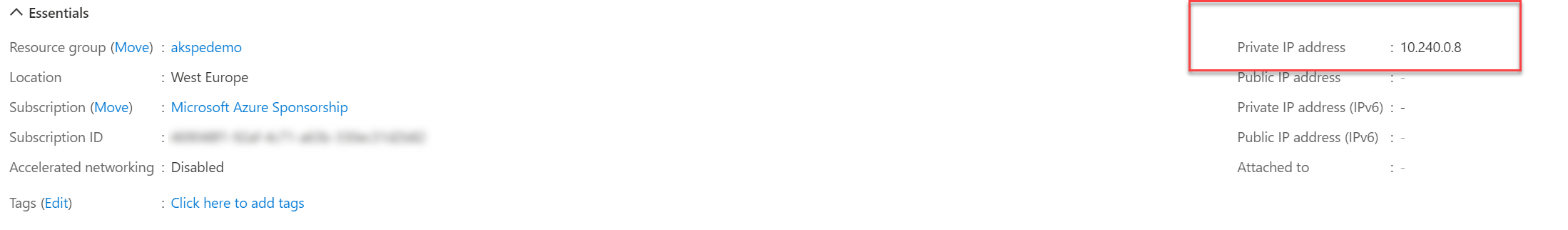

On the page that opens, find the “Private IP address” and note this.
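The same IP can be looked up from the CLI by following the Private Endpoint to its network interface. A minimal sketch; `private_endpoint_ip` is a hypothetical helper, and it assumes the endpoint has a single NIC with the address on its first IP configuration.

```shell
# Hypothetical helper: prints the private IP of a Private Endpoint by looking up
# its network interface. Arguments: <mgmt resource group> <private endpoint name>
private_endpoint_ip() {
  local nic_id
  nic_id=$(az network private-endpoint show --resource-group "$1" --name "$2" \
    --query 'networkInterfaces[0].id' --output tsv) || return 1
  az network nic show --ids "$nic_id" \
    --query 'ipConfigurations[0].privateIPAddress' --output tsv
}
```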

Go back to the DNS zone you created and click the button to add a new record set. Paste the name you copied from the AKS zone into the name box, set the type to “A”, and enter the IP you copied from the management Private Endpoint’s network interface into the IP address box. Click OK to add the record.

Your Private Endpoint is now set up. On a machine in the management vNet, you can run the `az aks get-credentials` command as usual, and you will then be able to connect. Remember that you use the standard AKS cluster name; you don’t need to remember or use the Private Link names we created, as this is all handled for you.
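From a machine in the management vNet, connecting might look like the following. A minimal sketch, assuming the Azure CLI and kubectl are installed and you are signed in; `connect_to_aks` is a hypothetical helper.

```shell
# Hypothetical helper: merges the cluster's credentials into ~/.kube/config and
# checks that the API server answers through the management Private Endpoint.
connect_to_aks() {
  az aks get-credentials --resource-group "$1" --name "$2" || return 1
  kubectl get nodes
}
```

Usage: `connect_to_aks <AKS resource group> <AKS Cluster Name>`. If `kubectl get nodes` hangs or fails to resolve the server name, the DNS zone, its vNet link and the A record are the first things to check.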

Azure CLI

Create Private Endpoint

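```shell
# Create a Private Endpoint for the AKS API server in the management vNet.
# The location must match the management vNet's region; "management" is the
# only sub-resource (group ID) that the AKS API server exposes.
az network private-endpoint create \
  --name aks-mgmt-pe \
  --connection-name aks-mgmt-pe \
  --resource-group <mgmt resource group> \
  --location <mgmt vnet region> \
  --vnet-name <mgmt vnet name> \
  --subnet <subnet for PE in mgmt vNet> \
  --private-connection-resource-id /subscriptions/<subscription ID>/resourceGroups/<AKS resource group>/providers/Microsoft.ContainerService/managedClusters/<AKS Cluster Name> \
  --group-id management
```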
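Once the command completes, it is worth checking that the connection to the cluster was approved. If you have the required permissions on the cluster, this normally happens automatically. A sketch; `private_endpoint_status` is a hypothetical helper, and the query path assumes the CLI's flattened output for private endpoints.

```shell
# Hypothetical helper: prints the state (for example "Approved") of a Private
# Endpoint's first Private Link service connection.
private_endpoint_status() {
  az network private-endpoint show --resource-group "$1" --name "$2" \
    --query 'privateLinkServiceConnections[0].privateLinkServiceConnectionState.status' \
    --output tsv
}
```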

Create and Link Private DNS Zone

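```shell
# Create a Private DNS zone with the same name as the one in the AKS node resource group
az network private-dns zone create \
  --resource-group <mgmt resource group> \
  --name "<DNS Zone name copied from cluster resource group>"

# Link the zone to the management vNet so machines there can resolve the API server
az network private-dns link vnet create \
  --resource-group <mgmt resource group> \
  --zone-name "<DNS Zone name copied from cluster resource group>" \
  --name akspedemo-link \
  --virtual-network <mgmt vnet name> \
  --registration-enabled false
```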

Create DNS Record for Cluster

```shell
# Point the cluster's API server record at the management Private Endpoint's IP
az network private-dns record-set a add-record \
  --resource-group <mgmt resource group> \
  --zone-name "<DNS Zone name copied from cluster resource group>" \
  --record-set-name "<record name copied from cluster zone>" \
  --ipv4-address <IP Address copied from PE NIC>
```
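To double-check the result, you can compare the record against the Private Endpoint's IP. A minimal sketch; `verify_aks_dns_record` is a hypothetical helper and assumes the record set holds a single A record.

```shell
# Hypothetical helper: succeeds if the cluster's A record in the management zone
# points at the expected IP (the management Private Endpoint's IP).
# Arguments: <mgmt resource group> <zone name> <record name> <expected IP>
verify_aks_dns_record() {
  local actual_ip
  actual_ip=$(az network private-dns record-set a show \
    --resource-group "$1" --zone-name "$2" --name "$3" \
    --query 'aRecords[0].ipv4Address' --output tsv) || return 1
  if [ "$actual_ip" = "$4" ]; then
    echo "ok: $3 -> $actual_ip"
  else
    echo "mismatch: $3 -> $actual_ip (expected $4)" >&2
    return 1
  fi
}
```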

Bicep

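```bicep
param privateDnsZoneName string   // zone name copied from the AKS node resource group
param privateDnsRecordName string // A record name copied from the AKS zone
param privateDnsRecordIP string   // private IP of the new Private Endpoint's NIC
param name string
param location string             // must match the management vNet's region
param vnetId string               // resource ID of the management vNet
param subnetName string
param aksClusterId string         // resource ID of the AKS cluster

// Private Endpoint that injects the AKS API server into the management vNet
resource privateEndpoint 'Microsoft.Network/privateEndpoints@2020-06-01' = {
  name: '${name}-plink'
  location: location
  properties: {
    subnet: {
      id: '${vnetId}/subnets/${subnetName}'
    }
    privateLinkServiceConnections: [
      {
        name: '${name}-plink'
        properties: {
          privateLinkServiceId: aksClusterId
          groupIds: [
            'management'
          ]
        }
      }
    ]
  }
}

// Private DNS zones are global resources, so their location must be 'global'
resource privateDns 'Microsoft.Network/privateDnsZones@2020-06-01' = {
  name: privateDnsZoneName
  location: 'global'
}

// Link the zone to the management vNet so machines there can resolve the API server
resource vnetLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = {
  parent: privateDns
  name: '${name}-link'
  location: 'global'
  properties: {
    registrationEnabled: false
    virtualNetwork: {
      id: vnetId
    }
  }
}

// A record pointing the cluster's API server name at the Private Endpoint IP
resource aRecord 'Microsoft.Network/privateDnsZones/A@2020-06-01' = {
  parent: privateDns
  name: privateDnsRecordName
  properties: {
    ttl: 3600
    aRecords: [
      {
        ipv4Address: privateDnsRecordIP
      }
    ]
  }
}
```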
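One way to deploy the Bicep template above is `az deployment group create` with the parameters passed as `key=value` pairs. A minimal sketch; `deploy_aks_mgmt_endpoint`, the `main.bicep` file name and the environment variable names (`MGMT_RG`, `PE_NAME` and so on) are all hypothetical. The template takes the Private Endpoint's IP as an input, so you may need to look that IP up once the endpoint exists before the A record can be set correctly.

```shell
# Hypothetical helper: deploys the Bicep template into the management resource
# group. Parameter values come from environment variables whose names are
# illustrative only; TEMPLATE_FILE defaults to main.bicep.
deploy_aks_mgmt_endpoint() {
  az deployment group create \
    --resource-group "$MGMT_RG" \
    --template-file "${TEMPLATE_FILE:-main.bicep}" \
    --parameters \
      name="$PE_NAME" \
      location="$LOCATION" \
      vnetId="$VNET_ID" \
      subnetName="$SUBNET_NAME" \
      aksClusterId="$AKS_CLUSTER_ID" \
      privateDnsZoneName="$DNS_ZONE_NAME" \
      privateDnsRecordName="$DNS_RECORD_NAME" \
      privateDnsRecordIP="$DNS_RECORD_IP"
}
```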